Interpretable Machine Learning: Bringing Data Science Out of the “Dark Age” (Recipient of the 2022 AAAI Squirrel AI Award for Artificial Intelligence for the Benefit of Humanity)

Presenters

Cynthia Rudin (Duke University)

Thu, February 24 11:30 PM - Fri, February 25 12:30 AM (+00:00)

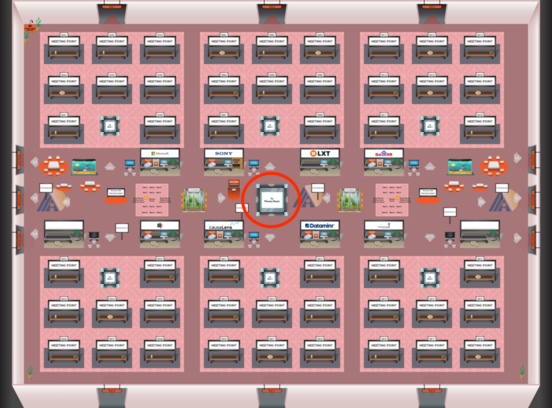

Red Plenary Room, Blue Plenary Room

Abstract: With widespread use of machine learning, there have been serious societal consequences from using black box models for high-stakes decisions in criminal justice, healthcare, financial lending, and beyond. Interpretability of machine learning models is critical when the cost of a wrong decision is high. Throughout my career, I have had the opportunity to work with power engineers, doctors, and police detectives. Using interpretable models has been the key to allowing me to help them with important high-stakes societal problems. Interpretability can bring us out of the “dark” age of the black box into the age of insight and enlightenment.

Bio

Cynthia Rudin is a professor of computer science and engineering at Duke University, and directs the Interpretable Machine Learning Lab. Previously, Prof. Rudin held positions at MIT, Columbia, and NYU. She holds degrees from the University at Buffalo and Princeton. She is the recipient of the 2022 Squirrel AI Award for Artificial Intelligence for the Benefit of Humanity from AAAI. She is also a three-time winner of the INFORMS Innovative Applications in Analytics Award, and a fellow of the American Statistical Association and the Institute of Mathematical Statistics. Her goal is to design predictive models that people can understand.
