Safety and Robustness for Deep Learning with Provable Guarantees (AAAI-22 Invited Talk)

Presenters

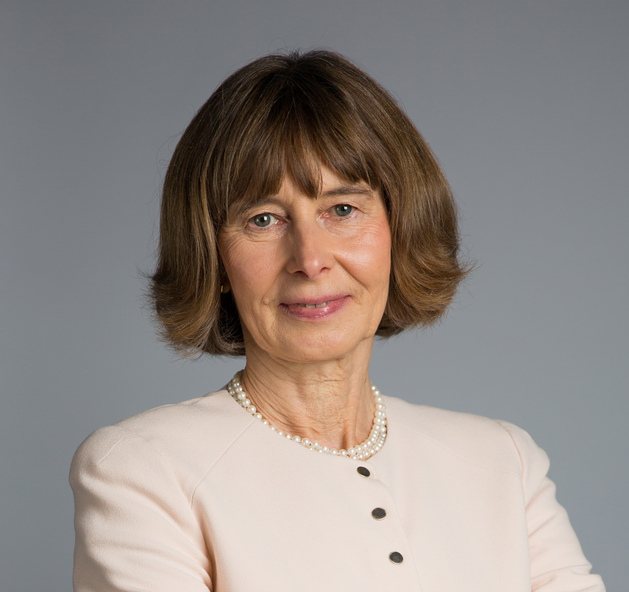

Marta Kwiatkowska (University of Oxford)

Sat, February 26 3:30 PM - 4:30 PM (+00:00)

Red Plenary Room, Blue Plenary Room

Abstract: Computing systems are becoming ever more complex, with automated decisions increasingly based on deep learning components. A wide variety of applications are being developed, many of them safety-critical, such as self-driving cars and medical diagnosis. Since deep learning models are unstable with respect to adversarial perturbations, there is a need for rigorous software development methodologies that encompass machine learning components. This lecture will describe progress in developing automated certification techniques for learnt software components to ensure the safety and adversarial robustness of their decisions, including a discussion of the role played by Bayesian learning and causality.

Bio

Marta Kwiatkowska is Professor of Computing Systems and Fellow of Trinity College, University of Oxford. She is known for fundamental contributions to the theory and practice of model checking for probabilistic systems, and is currently focusing on the safety, robustness and fairness of automated decision making in Artificial Intelligence. She led the development of the PRISM model checker (www.prismmodelchecker.org), which has been adopted in diverse fields, including wireless networks, security, robotics, healthcare and DNA computing, with genuine flaws found and corrected in real-world protocols. Her research has been supported by two ERC Advanced Grants (VERIWARE and FUN2MODEL), an EPSRC Programme Grant on Mobile Autonomy and the EPSRC Prosperity Partnership FAIR. Kwiatkowska won the Royal Society Milner Award, the BCS Lovelace Medal and the Van Wijngaarden Award, and received an honorary doctorate from KTH Royal Institute of Technology in Stockholm. She is a Fellow of the Royal Society, a Fellow of the ACM and a Member of Academia Europaea.

Marta Kwiatkowska is Professor of Computing Systems and Fellow of Trinity College, University of Oxford. She is known for fundamental contributions to the theory and practice of model checking for probabilistic systems, and is currently focusing on safety, robustness and fairness of automated decision making in Artificial Intelligence. She led the development of the PRISM model checker (www.prismmodelchecker.org), which has been adopted in diverse fields, including wireless networks, security, robotics, healthcare and DNA computing, with genuine flaws found and corrected in real-world protocols. Her research has been supported by two ERC Advanced Grants, VERIWARE and FUN2MODEL, EPSRC Programme Grant on Mobile Autonomy and EPSRC Prosperity Partnership FAIR. Kwiatkowska won the Royal Society Milner Award, the BCS Lovelace Medal and the Van Wijngaarden Award, and received an honorary doctorate from KTH Royal Institute of Technology in Stockholm. She is a Fellow of the Royal Society, Fellow of ACM and Member of Academia Europea.