Cross-Lingual Adversarial Domain Adaptation for Novice Programming

Ye Mao, Farzaneh Khoshnevisan, Thomas Price, Tiffany Barnes, Min Chi

[AAAI-22] Main Track

Abstract:

Student modeling sits at the epicenter of adaptive learning technology. In contrast to the voluminous work on student modeling for well-defined domains such as algebra, there has been little research on student modeling in programming (SMP). As part of developing adaptive learning environments for programming, we focus on two essential SMP tasks: program classification and early prediction of student success. Because open-ended programming exercises have unbounded solution spaces, researchers often face data scarcity in SMP. In this work, we propose a Cross-Lingual Adversarial Domain Adaptation (CrossLing) framework that leverages a large programming dataset to learn features that improve SMP models built on a much smaller dataset in a different programming language. Our framework maintains one globally invariant latent representation across both datasets via an adversarial learning process, and allocates a domain-specific model to each dataset to extract local latent representations that cannot and should not be unified. By separating globally shared representations from domain-specific representations, our framework outperforms existing state-of-the-art methods on both SMP tasks.
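The split between a shared representation and domain-specific representations can be illustrated with a small forward-pass sketch. This is not the authors' implementation: the abstract does not specify the encoders, the discriminator, or the loss, so everything below is an assumption made for illustration. In particular, the single-layer encoders, the logistic domain discriminator, the min-max style objective (`task - discriminator`), the dimensions, and all names (`forward`, `losses`, `params`, `src`, `tgt`) are hypothetical, and the data is random.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: both datasets are assumed to be pre-embedded to IN_DIM.
IN_DIM, SHARED_DIM, PRIVATE_DIM = 16, 8, 4


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce(p, y, eps=1e-9):
    """Mean binary cross-entropy between probabilities p and labels y."""
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


# Illustrative parameters: one shared encoder, one private encoder and one
# task head per domain, and one domain discriminator on the shared features.
params = {
    "shared": rng.normal(scale=0.1, size=(IN_DIM, SHARED_DIM)),
    "private_src": rng.normal(scale=0.1, size=(IN_DIM, PRIVATE_DIM)),
    "private_tgt": rng.normal(scale=0.1, size=(IN_DIM, PRIVATE_DIM)),
    "task_src": rng.normal(scale=0.1, size=(SHARED_DIM + PRIVATE_DIM,)),
    "task_tgt": rng.normal(scale=0.1, size=(SHARED_DIM + PRIVATE_DIM,)),
    "disc": rng.normal(scale=0.1, size=(SHARED_DIM,)),
}


def forward(x, domain, params):
    """Return (global features, local features, task prob, domain prob)."""
    g = np.tanh(x @ params["shared"])               # shared across domains
    l = np.tanh(x @ params["private_" + domain])    # specific to one domain
    features = np.concatenate([g, l], axis=1)
    task_prob = sigmoid(features @ params["task_" + domain])
    domain_prob = sigmoid(g @ params["disc"])       # discriminator sees g only
    return g, l, task_prob, domain_prob


def losses(x_src, y_src, x_tgt, y_tgt, params):
    """Compute task, discriminator, and (assumed) adversarial encoder losses."""
    _, _, p_src, d_src = forward(x_src, "src", params)
    _, _, p_tgt, d_tgt = forward(x_tgt, "tgt", params)
    task = bce(p_src, y_src) + bce(p_tgt, y_tgt)
    domain_prob = np.concatenate([d_src, d_tgt])
    domain_label = np.concatenate([np.zeros(len(x_src)), np.ones(len(x_tgt))])
    disc = bce(domain_prob, domain_label)
    # Assumed min-max objective: the encoders lower the task loss while
    # raising the discriminator loss, pushing g toward domain invariance.
    return {"task": task, "discriminator": disc, "encoder": task - disc}


# Random stand-in data: a large source set and a much smaller target set.
x_src = rng.normal(size=(200, IN_DIM))
y_src = rng.integers(0, 2, size=200).astype(float)
x_tgt = rng.normal(size=(20, IN_DIM))
y_tgt = rng.integers(0, 2, size=20).astype(float)

print(losses(x_src, y_src, x_tgt, y_tgt, params))
```

In a trained version of this sketch, the discriminator would be updated to minimize `discriminator` while the encoders and task heads are updated to minimize `encoder`. Only the shared features are fed to the discriminator, so the private encoders remain free to keep language-specific information.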

Sessions where this paper appears

-

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

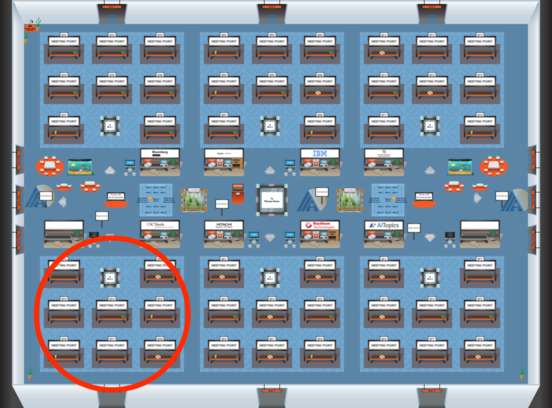

Blue 4

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 4

-

Oral Session 5

Sat, February 26 2:30 AM - 3:45 AM (+00:00)

Blue 4
