CTIN: Robust Contextual Transformer Network for Inertial Navigation

Bingbing Rao, Ehsan Kazemi, Yifan Ding, Devu M Shila, Frank M. Tucker, Liqiang Wang

[AAAI-22] Main Track

Abstract:

Recently, data-driven inertial navigation approaches have demonstrated their capability of using well-trained neural networks to obtain accurate position estimates from inertial measurement unit (IMU) measurements. In this paper, we propose a novel robust Contextual Transformer-based network for Inertial Navigation (CTIN) to accurately predict velocity and trajectory. To this end, we first design a ResNet-based encoder enhanced by local and global multi-head self-attention to capture spatial contextual information from IMU measurements. We then fuse these spatial representations with temporal knowledge by leveraging multi-head attention in the Transformer decoder. Finally, multi-task learning with uncertainty reduction is leveraged to improve learning efficiency and prediction accuracy of velocity and trajectory. Extensive experiments over a wide range of inertial datasets (e.g., RIDI, OxIOD, RoNIN, IDOL, and our own) show that CTIN is very robust and outperforms state-of-the-art models.

Sessions where this paper appears

-

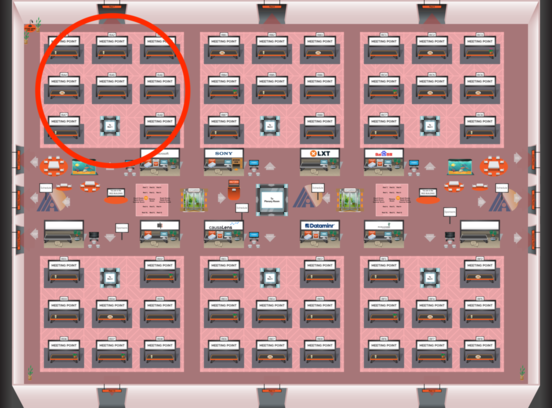

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Red 1

- Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Red 1

- Oral Session 4

Fri, February 25 6:45 PM - 8:00 PM (+00:00)

Red 1
