Learning Mixture of Domain-Specific Experts via Disentangled Factors for Autonomous Driving

Inhan Kim, Joonyeong Lee, Daijin Kim

[AAAI-22] Main Track

Abstract:

Because people know which factors affect vehicle control in a given driving situation, they can drive safely even in diverse driving environments. To mimic this behavior, we propose a two-stage representation learning model. The first stage splits the latent features into domain-specific features, which contain information specific to a single domain, and domain-general features, which are consistent across all domains. The second stage uses a mutual information estimator to disentangle dynamic-object features, which contain information about dynamic objects, from the latent features. In this study, the behavior cloning problem is divided into several domain-specific subspaces, and each expert specializes in one domain-specific policy. The proposed mixture of domain-specific experts (MoDE) model predicts the final control values through the cooperation of the experts via a gating function: the domain-specific features are used to compute the importance weight of each domain-specific expert, and the disentangled domain-general and dynamic-object features are used to estimate the control values. To validate MoDE, we conducted several experiments on the CARLA benchmarks, where it achieved higher success rates than state-of-the-art approaches under several conditions and tasks.
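The gating idea in the abstract (domain-specific features decide how much each expert counts, while the experts themselves read the domain-general and dynamic-object features) can be sketched as a standard mixture-of-experts forward pass. The abstract does not specify the gating network, expert architecture, or feature sizes, so the linear gate with softmax, the function and parameter names (`mixture_of_experts_control`, `gate_weights`), and the toy experts below are hypothetical and for illustration only, not the authors' implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical: an expert maps a feature vector to a control vector, e.g. [steer, throttle, brake].
Expert = Callable[[Sequence[float]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: turns gate scores into weights that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_of_experts_control(
    domain_specific: Sequence[float],
    domain_general: Sequence[float],
    dynamic_object: Sequence[float],
    gate_weights: Sequence[Sequence[float]],  # hypothetical: one linear scoring row per expert
    experts: Sequence[Expert],
) -> Tuple[List[float], List[float]]:
    """Hypothetical MoDE-style forward pass; returns (control values, gate weights)."""
    if len(gate_weights) != len(experts):
        raise ValueError("need exactly one gate row per expert")
    # 1. Gating: only the domain-specific features score each expert.
    logits = [sum(w * x for w, x in zip(row, domain_specific)) for row in gate_weights]
    weights = softmax(logits)
    # 2. Experts: each sees the concatenated domain-general and dynamic-object features.
    expert_input = list(domain_general) + list(dynamic_object)
    outputs = [expert(expert_input) for expert in experts]
    # 3. Combination: gate-weighted sum of the experts' control predictions.
    n_controls = len(outputs[0])
    control = [sum(w * out[j] for w, out in zip(weights, outputs)) for j in range(n_controls)]
    return control, weights


# Toy usage: two hypothetical experts with fixed outputs and a gate that scores them equally.
def cautious_expert(features: Sequence[float]) -> List[float]:
    return [0.0, 0.2, 0.8]


def cruising_expert(features: Sequence[float]) -> List[float]:
    return [0.0, 0.8, 0.0]


control, weights = mixture_of_experts_control(
    domain_specific=[1.0, 0.0],
    domain_general=[0.3, 0.1],
    dynamic_object=[0.9],
    gate_weights=[[0.5, 0.0], [0.5, 0.0]],
    experts=[cautious_expert, cruising_expert],
)
print(weights)  # [0.5, 0.5]
print(control)
```

Keeping the gate input separate from the expert input mirrors the division of labor described in the abstract: the gate only needs to recognize the domain, while the experts need the scene content to produce control values.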

Sessions where this paper appears

- Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

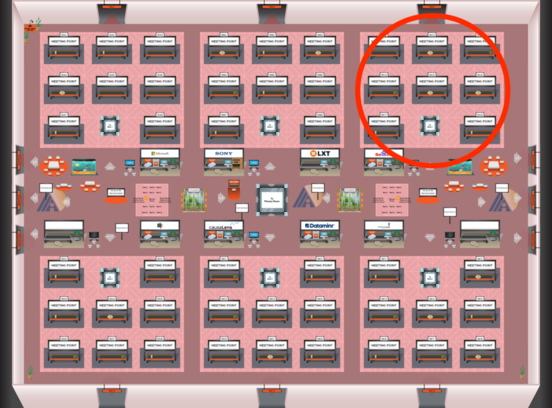

Red 3

- Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Red 3
