Backprop-Free Reinforcement Learning with Active Neural Generative Coding

Alexander G. Ororbia, Ankur Mali

[AAAI-22] Main Track

Abstract:

In humans, perceptual awareness facilitates the fast recognition and extraction of information from sensory input. This awareness largely depends on how the human agent interacts with the environment. In this work, we propose active neural generative coding, a computational framework for learning action-driven generative models without backpropagation of errors (backprop) in dynamic environments. Specifically, we develop an intelligent agent that operates even with sparse rewards, drawing inspiration from the cognitive theory of planning as inference. We demonstrate on several simple control problems that our framework performs competitively with deep Q-learning. The robust performance of our agent offers promising evidence that a backprop-free approach for neural inference and learning can drive goal-directed behavior.

Sessions where this paper appears

-

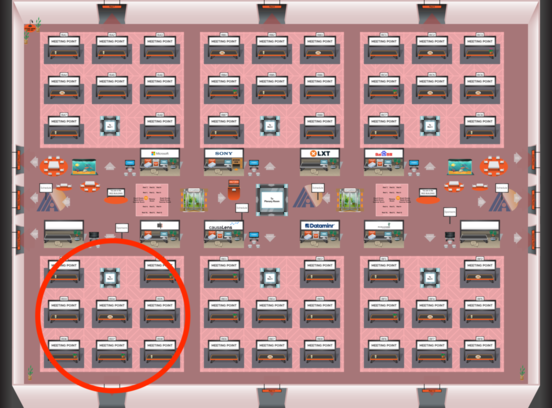

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Red 4

-

Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Red 4
