Abstract:

This work introduces Fractional Activation Linear Units (FALUs), a flexible generalization of adaptive activation functions. Leveraging principles from fractional calculus, FALUs define a diverse family of activation functions that encompasses many traditional and state-of-the-art activation functions, including the Sigmoid, ReLU, Swish, GELU, and Gaussian functions, as well as a large variety of smooth interpolations between them. Our technique introduces only a small number of additional trainable parameters and requires no specialized optimization or initialization procedures. FALUs therefore offer a seamless and rich automated solution to the problem of activation function optimization. Through experiments on a variety of conventional tasks and network architectures, we demonstrate the effectiveness of FALUs compared with traditional and state-of-the-art activation functions.
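The abstract does not state the FALU formula, so what follows is only a minimal sketch, under stated assumptions, of how a fractional-calculus activation family can be constructed. It assumes (our assumption, not necessarily the paper's parameterization) that the family is obtained by taking a fractional derivative of order `alpha` of a Swish-style base function x·σ(βx), and it evaluates that derivative numerically with the Grünwald-Letnikov definition, truncated after `n` terms with step size `h`. The names `falu_sketch`, `alpha`, `beta`, `h`, and `n` are hypothetical. With `alpha = 0` the sketch returns the base function exactly; `alpha = 1` and `alpha = 2` reduce to backward-difference approximations of its first and second derivatives; non-integer `alpha` gives fractional orders in between.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    # Assumed base function: x * sigmoid(beta * x).
    return x * sigmoid(beta * x)


def gl_weights(alpha: float, n: int) -> list[float]:
    # Grünwald-Letnikov weights w_k = (-1)^k * C(alpha, k) for k = 0..n-1,
    # computed with the recurrence w_k = w_{k-1} * (1 - (alpha + 1) / k).
    w = [1.0]
    for k in range(1, n):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def falu_sketch(x: float, alpha: float, beta: float = 1.0,
                h: float = 1e-2, n: int = 4000) -> float:
    # Hypothetical FALU-style activation: the order-alpha fractional derivative
    # of swish(., beta), approximated by a truncated Grünwald-Letnikov sum with
    # lower terminal -infinity. Truncation is harmless here because the base
    # function decays to 0 as its argument goes to -infinity.
    w = gl_weights(alpha, n)
    total = sum(wk * swish(x - k * h, beta) for k, wk in enumerate(w))
    return total / h ** alpha


if __name__ == "__main__":
    # Sweep the order alpha at a few inputs to see the family change shape.
    for alpha in (0.0, 0.5, 1.0, 1.5, 2.0):
        values = [round(falu_sketch(x, alpha), 4) for x in (-2.0, 0.0, 2.0)]
        print(f"alpha={alpha}: {values}")
```

Under this assumption, `alpha` and `beta` are the only added parameters per activation, which is consistent with the abstract's claim of a small number of extra trainable parameters; in a network they would be learned by backpropagation along with the weights. The O(n) sum per evaluation is purely illustrative; a practical layer would likely need a closed-form or cheaper approximation.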

Sessions where this paper appears

- Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

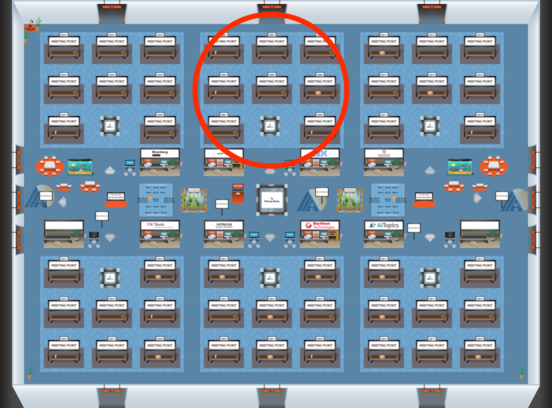

Blue 2

- Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Blue 2