Differential Assessment of Black-Box AI Agents

Rashmeet Kaur Nayyar, Pulkit Verma, Siddharth Srivastava

[AAAI-22] Main Track

Abstract:

Much of the research on learning symbolic models of AI agents focuses on agents with stationary models. This assumption fails to hold in settings where the agent’s capabilities may change as a result of learning, adaptation, or other post-deployment modifications. Efficient assessment of agents in such settings is critical for learning the true capabilities of an AI system and for ensuring its safe usage. In this work, we propose a novel approach to differentially assess black-box AI agents that have drifted from their prior known models. As a starting point, we consider the fully observable and deterministic setting. We leverage observations of the agent’s current behavior and knowledge of the initial model to generate an active querying policy that selectively queries the agent and computes an updated model of its functionality. Empirical evaluation shows that our approach is much more efficient than re-learning the agent model from scratch. We also show that the cost of differential assessment using our method is proportional to the amount of drift in the agent’s functionality.
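The abstract does not spell out the querying algorithm. The sketch below is only a rough illustration of why comparing new observations against a known prior model can be cheaper than relearning from scratch. It is not the authors' method, and several of its assumptions are ours. Actions are modeled STRIPS-style, with precondition, add, and delete sets over propositions. States are fully observed and transitions are deterministic, which matches the setting the abstract names. The hypothetical helper `drifted_actions` flags only the actions whose observed transitions contradict the prior model, and in this sketch those are the only candidates for fresh queries. The check cannot detect drift in behavior that the observations never exercise.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple

# A fully observable state: the set of propositions that are true.
State = FrozenSet[str]


@dataclass(frozen=True)
class ActionModel:
    """Hypothetical STRIPS-style action model (an assumption of this sketch)."""
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str]

    def apply(self, s: State) -> State:
        # Deterministic successor: remove deleted facts, then add new ones.
        return (s - self.delete) | self.add


def drifted_actions(model: Dict[str, ActionModel],
                    observations: List[Tuple[State, str, State]]) -> Set[str]:
    """Hypothetical helper: flag actions whose observed (s, a, s') transitions
    contradict the prior model."""
    suspects: Set[str] = set()
    for s, a, s_next in observations:
        prior = model[a]
        if not prior.pre <= s:
            # The agent executed `a` where the prior model says it is inapplicable.
            suspects.add(a)
        elif prior.apply(s) != s_next:
            # The observed effects differ from the effects the prior model predicts.
            suspects.add(a)
    return suspects


# Toy prior model with hypothetical actions "pick" and "drop".
prior_model = {
    "pick": ActionModel(pre=frozenset({"handempty", "ontable"}),
                        add=frozenset({"holding"}),
                        delete=frozenset({"handempty", "ontable"})),
    "drop": ActionModel(pre=frozenset({"holding"}),
                        add=frozenset({"handempty", "ontable"}),
                        delete=frozenset({"holding"})),
}
observed = [
    (frozenset({"handempty", "ontable"}), "pick", frozenset({"holding"})),
    # After drift, "drop" no longer puts the object back on the table.
    (frozenset({"holding"}), "drop", frozenset({"handempty"})),
]
print(drifted_actions(prior_model, observed))  # {'drop'}
```

Here only `drop` is flagged, so only it would need new queries, and `pick` keeps its prior model. This loosely mirrors the abstract's claim that the cost of assessment tracks the amount of drift.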


Sessions where this paper appears

- Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

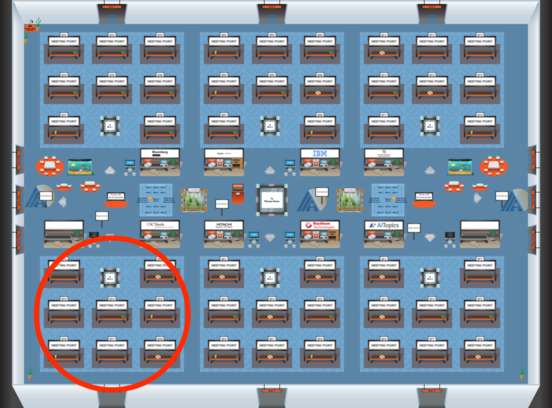

Blue 4

- Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Blue 4

- Oral Session 1

Thu, February 24 6:30 PM - 7:45 PM (+00:00)

Blue 4
