FFNet: Frequency Fusion Network for Semantic Scene Completion

Xuzhi Wang, Di Lin, Liang Wan

[AAAI-22] Main Track

Abstract:

Semantic scene completion (SSC) aims to simultaneously predict the semantic categories and the 3D volumetric occupancy of a scene. Existing SSC methods broadly use RGB-D images to provide the semantic and geometric information of objects. However, these methods fuse RGB-D data by concatenation, element-wise summation, or weighted summation. Such strategies ignore the large discrepancy between RGB and depth data, as well as the measurement uncertainty of depth data. To address this problem, we propose the Frequency Fusion Network (FFNet), a novel method that boosts semantic scene completion by making better use of RGB-D data. FFNet first explicitly correlates the RGB-D data in the frequency domain, in contrast to features extracted directly by convolution operations. The correlation information then guides the RGB-assisted depth features and the depth-assisted RGB features. Further, considering the properties of the different frequency components of RGB-D features, we propose a learnable elliptical mask that decomposes the features and attends to different frequency bands, facilitating the correlation of RGB-D data. We evaluate FFNet extensively on public SSC benchmarks, where it surpasses state-of-the-art methods. Our code will be made publicly available.
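The abstract does not specify the exact form of the learnable elliptical mask. The following is a minimal NumPy sketch of the general idea only, not the authors' implementation. It assumes the mask is a soft-edged ellipse centered on the zero frequency of each channel's 2D FFT spectrum. The low band is what lies inside the ellipse, and the high band is the remainder. The function names `elliptical_mask` and `split_frequency` and the parameters `a`, `b` (semi-axes) and `sharpness` are hypothetical.

```python
import numpy as np


def elliptical_mask(h, w, a, b, sharpness=10.0):
    """Soft elliptical low-pass mask for an fftshift-ed (centered) spectrum.

    Hypothetical parameterization: a and b are the ellipse semi-axes as
    fractions of the half-height and half-width of the spectrum.
    Values are close to 1 inside the ellipse and close to 0 outside.
    """
    ys = (np.arange(h) - h // 2) / (h / 2)  # normalized vertical frequency
    xs = (np.arange(w) - w // 2) / (w / 2)  # normalized horizontal frequency
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    r = (yy / a) ** 2 + (xx / b) ** 2  # r < 1 inside the ellipse
    # Sigmoid edge written via tanh to avoid overflow; it keeps the mask
    # differentiable in a and b, which is what would make it learnable
    # in an autodiff framework (NumPy itself does not compute gradients).
    return 0.5 * (1.0 - np.tanh(sharpness * (r - 1.0) / 2.0))


def split_frequency(feat, a, b, sharpness=10.0):
    """Split a (C, H, W) real feature map into low- and high-frequency parts.

    The two parts sum back to the input because the masks are m and 1 - m.
    """
    _, h, w = feat.shape
    axes = (-2, -1)
    spec = np.fft.fftshift(np.fft.fft2(feat, axes=axes), axes=axes)
    m = elliptical_mask(h, w, a, b, sharpness)
    low = np.fft.ifft2(np.fft.ifftshift(spec * m, axes=axes), axes=axes).real
    high = np.fft.ifft2(np.fft.ifftshift(spec * (1.0 - m), axes=axes), axes=axes).real
    return low, high


feat = np.random.default_rng(0).standard_normal((4, 32, 32))
low, high = split_frequency(feat, a=0.3, b=0.5)
print(np.allclose(low + high, feat))
```

The soft boundary is one design choice among several. A hard binary ellipse would also split the spectrum, but it has zero gradient with respect to `a` and `b`, so the mask shape could not be trained.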

Sessions where this paper appears

-

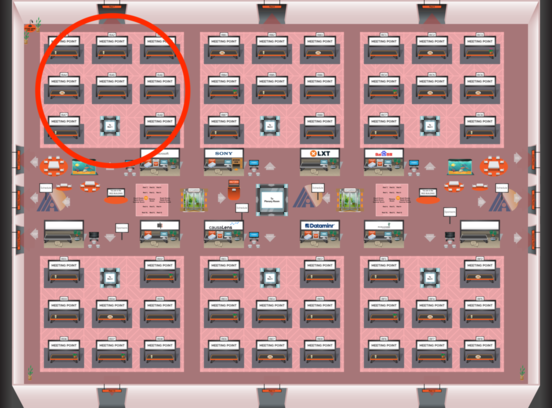

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Red 1

Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Red 1