Abstract:

Tensor optimization is crucial to massive machine learning and signal processing tasks. In this paper, we consider tensor optimization with a convex and well-conditioned objective function and reformulate it as a nonconvex optimization problem using a Burer-Monteiro type parameterization. We analyze the local convergence of vanilla gradient descent applied to the factored formulation and establish a local regularity condition under mild assumptions. We also provide a linear convergence analysis of gradient descent initialized in a neighborhood of the true tensor factors. Complementary to the local analysis, this work also characterizes the global geometry of the best rank-one tensor approximation problem and demonstrates that, for orthogonally decomposable tensors, the problem has no spurious local minima and all saddle points are strict, except for the one at zero, which is a third-order saddle point.
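As an illustration of the setting described in the abstract, the following is a minimal sketch of vanilla gradient descent on a factored rank-one problem. It is not the paper's algorithm or code. It assumes a symmetric third-order tensor, the objective f(x) = 0.5 * ||x ⊗ x ⊗ x - T||_F^2, and an orthogonally decomposable T whose components are the standard basis vectors. The weights, starting point, step size, and all function names are hypothetical choices made for the example.

```python
import itertools


def odeco_tensor(weights):
    """Build T = sum_i weights[i] * e_i (x) e_i (x) e_i as nested lists.

    Using the standard basis as the orthonormal components is an
    assumption made to keep the example small.
    """
    n = len(weights)
    T = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for i, w in enumerate(weights):
        T[i][i][i] = w
    return T


def loss(T, x):
    """f(x) = 0.5 * ||x (x) x (x) x - T||_F^2 (assumed objective)."""
    n = len(x)
    return 0.5 * sum(
        (x[i] * x[j] * x[k] - T[i][j][k]) ** 2
        for i, j, k in itertools.product(range(n), repeat=3)
    )


def grad(T, x):
    """Gradient of f for a symmetric T: 3 * (||x||^4 * x - T(., x, x))."""
    n = len(x)
    sq_norm = sum(v * v for v in x)
    Txx = [
        sum(T[i][j][k] * x[j] * x[k] for j in range(n) for k in range(n))
        for i in range(n)
    ]
    return [3.0 * (sq_norm * sq_norm * x[i] - Txx[i]) for i in range(n)]


def gradient_descent(T, x0, step, iters):
    """Plain gradient descent with a fixed step; returns iterate and losses."""
    x = list(x0)
    losses = [loss(T, x)]
    for _ in range(iters):
        g = grad(T, x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
        losses.append(loss(T, x))
    return x, losses


# Hypothetical example: weights (2, 1, 0.5), start near the top component.
T = odeco_tensor([2.0, 1.0, 0.5])
x_hat, losses = gradient_descent(T, [1.1, 0.1, -0.1], step=0.02, iters=300)
print(x_hat, losses[0], losses[-1])
```

Under these assumptions the local minimizers are the scaled basis vectors w_i^(1/3) * e_i, so an iterate started close to one of them should approach it. The fixed step size 0.02 was picked by hand for this particular tensor and is not a recommendation from the paper.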

Sessions where this paper appears

- Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

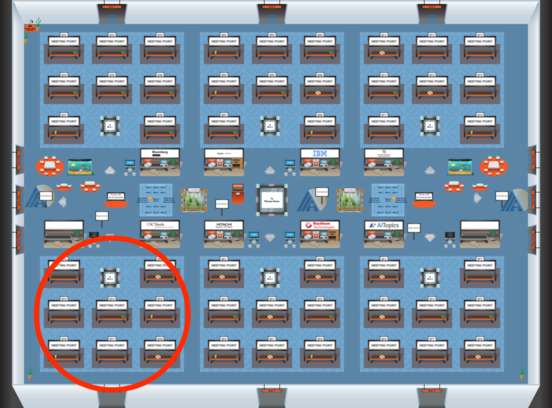

Blue 4

- Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Blue 4