Visual Consensus Modeling for Video-Text Retrieval

Shuqiang Cao, Bairui Wang, Wei Zhang, Lin Ma

[AAAI-22] Main Track

Abstract:

In this paper, we propose a novel method, namely visual consensus modeling, to mine the commonsense knowledge shared between the video and text modalities for video-text retrieval. Unlike existing works, which learn the video and text representations and their complicated relationships solely from pairwise video-text data, we make the first attempt to model the visual consensus by mining visual concepts from videos and exploiting their co-occurrence patterns within the video and text modalities, with no reliance on any additional concept annotations. Specifically, we build a shareable and learnable graph as the visual consensus, where the nodes denote the mined visual concepts and the edges connecting the nodes represent the co-occurrence relationships between the visual concepts. Extensive experimental results on public benchmark datasets demonstrate that our proposed method, with its ability to effectively model the visual consensus, achieves state-of-the-art performance on the bidirectional video-text retrieval task. Our code is available at https://github.com/sqiangcao99/VCM.
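To make the idea of a concept graph with co-occurrence edges more concrete, here is a minimal sketch in Python. It is not the authors' implementation: the function names `cooccurrence_edges` and `normalized_neighbors` are hypothetical, the string labels stand in for mined visual concepts purely for readability, and the sketch only counts co-occurrences, whereas the abstract describes a graph that is also learnable.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_edges(concepts_per_sample):
    """Count how often each unordered pair of concepts appears together.

    concepts_per_sample: iterable of concept collections, one per sample
    (hypothetical input; how concepts are mined is not covered here).
    """
    edge_counts = Counter()
    for concepts in concepts_per_sample:
        # Deduplicate and sort so each pair has one canonical key per sample.
        for pair in combinations(sorted(set(concepts)), 2):
            edge_counts[pair] += 1
    return edge_counts


def normalized_neighbors(edge_counts, concept):
    """Return {neighbor: weight} for one concept, with weights summing to 1."""
    neighbors = {}
    for (a, b), count in edge_counts.items():
        if a == concept:
            neighbors[b] = count
        elif b == concept:
            neighbors[a] = count
    total = sum(neighbors.values())
    # A concept with no co-occurrences has no outgoing edges.
    return {n: c / total for n, c in neighbors.items()} if total else {}


# Toy samples with made-up concept labels (assumption for illustration only).
samples = [["dog", "grass", "ball"], ["dog", "grass"], ["ball", "child"]]
edges = cooccurrence_edges(samples)
dog_neighbors = normalized_neighbors(edges, "dog")
```

In a setup like the one the abstract outlines, counts of this kind could plausibly serve as initial edge weights that are then refined during training; that use is an assumption here, not something the abstract specifies.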

Sessions where this paper appears

-

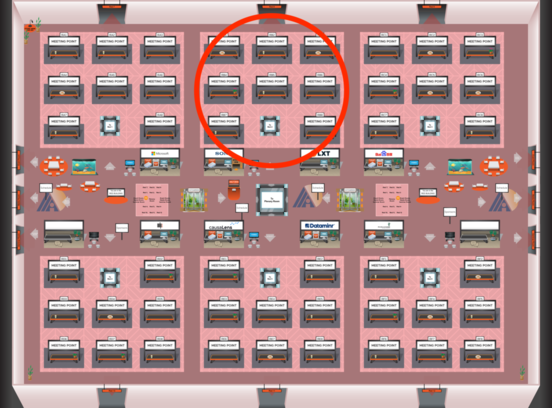

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Red 2

Red 2

-

Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Red 2

Red 2