Equilibrium Finding in Matrix Games Via Greedy Regret Minimization

Hugh Zhang, Adam Lerer, Noam Brown

[AAAI-22] Main Track

Abstract:

We extend the classic regret minimization framework for approximating equilibria in matrix games by greedily weighting iterates based on the regrets observed at runtime. Theoretically, our method retains all previous convergence rate guarantees. Empirically, experiments on large random matrix games and normal-form subgames of the AI benchmark Diplomacy show that greedy weights outperform previous methods whenever sampling is used, sometimes by several orders of magnitude.
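For readers unfamiliar with the baseline the abstract refers to, the following is a minimal sketch of classic regret matching in self-play on a two-player zero-sum matrix game, with a weighted average of the iterates. It is an illustration under stated assumptions, not the authors' implementation: the function names, the `weight_fn` hook, and the example payoff matrix are all hypothetical, and the default uniform weights correspond to the classic framework. The paper's greedy rule for choosing weights from observed regrets is not reproduced here.

```python
def regret_matching_strategy(cum_regret):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in cum_regret]
    total = sum(positive)
    n = len(cum_regret)
    if total <= 0.0:
        return [1.0 / n] * n  # no positive regret yet: play uniformly
    return [p / total for p in positive]


def exploitability(A, x, y):
    """Duality gap of (x, y); the row player maximizes x^T A y."""
    rows, cols = len(A), len(A[0])
    row_vals = [sum(A[i][j] * y[j] for j in range(cols)) for i in range(rows)]
    col_vals = [sum(A[i][j] * x[i] for i in range(rows)) for j in range(cols)]
    return max(row_vals) - min(col_vals)


def solve_matrix_game(A, iterations, weight_fn=lambda t: 1.0):
    """Regret matching self-play; returns weighted-average strategies.

    weight_fn is a hypothetical hook: it gives the weight of iterate t in
    both the regret sums and the average. The default (all ones) is plain
    uniform averaging, NOT the greedy weighting described in the abstract.
    """
    rows, cols = len(A), len(A[0])
    regret_row, regret_col = [0.0] * rows, [0.0] * cols
    avg_x, avg_y = [0.0] * rows, [0.0] * cols
    total_weight = 0.0

    for t in range(1, iterations + 1):
        x = regret_matching_strategy(regret_row)
        y = regret_matching_strategy(regret_col)

        # Expected payoff of every pure action against the opponent's mix.
        row_vals = [sum(A[i][j] * y[j] for j in range(cols)) for i in range(rows)]
        col_vals = [sum(A[i][j] * x[i] for i in range(rows)) for j in range(cols)]
        value = sum(x[i] * row_vals[i] for i in range(rows))

        w = weight_fn(t)
        for i in range(rows):
            regret_row[i] += w * (row_vals[i] - value)
        for j in range(cols):
            # The column player receives the negated payoff.
            regret_col[j] += w * (value - col_vals[j])

        # Fold the new iterate into the weighted average.
        total_weight += w
        for i in range(rows):
            avg_x[i] += w * (x[i] - avg_x[i]) / total_weight
        for j in range(cols):
            avg_y[j] += w * (y[j] - avg_y[j]) / total_weight

    return avg_x, avg_y


if __name__ == "__main__":
    # Hypothetical 2x2 zero-sum game used only for illustration.
    A = [[2.0, -1.0], [-1.0, 1.0]]
    x, y = solve_matrix_game(A, 5000)
    print("row strategy:", x)
    print("column strategy:", y)
    print("exploitability:", exploitability(A, x, y))
```

The averaged strategies, rather than the last iterates, are what approach an equilibrium in this scheme, which is why the choice of per-iterate weights matters.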

Sessions where this paper appears

-

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

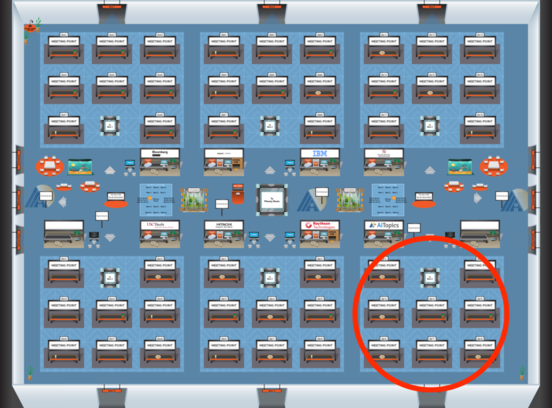

Blue 6

Blue 6

- Poster Session 8: Sun, February 27 12:45 AM - 2:30 AM (+00:00), Blue 6