Why Fair Labels Can Yield Unfair Predictions: Graphical Conditions for Introduced Unfairness

Carolyn Ashurst, Ryan Carey, Silvia Chiappa, Tom Everitt

[AAAI-22] Main Track

Abstract:

In addition to reproducing discriminatory relationships in the training data, machine learning (ML) systems can also introduce or amplify discriminatory effects. We refer to this as introduced unfairness, and investigate the conditions under which it may arise. To this end, we propose introduced total variation as a measure of introduced unfairness, and establish graphical conditions under which it may be incentivised to occur. These criteria imply that adding the sensitive attribute as a feature removes the incentive for introduced variation under well-behaved loss functions. Additionally, taking a causal perspective, introduced path-specific effects shed light on the issue of when specific paths should be considered fair.
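
To make the idea of introduced total variation concrete, here is a minimal sketch. The abstract does not state the formal definition, so this assumes a binary sensitive attribute and reads the measure as the absolute between-group gap in mean predictions minus the same gap in mean labels. The function names and the toy data are hypothetical, chosen only for illustration.

```python
from statistics import mean


def group_gap(values, groups):
    """Absolute difference in mean value between group 1 and group 0."""
    group1 = [v for v, g in zip(values, groups) if g == 1]
    group0 = [v for v, g in zip(values, groups) if g == 0]
    return abs(mean(group1) - mean(group0))


def introduced_total_variation(labels, predictions, groups):
    """Assumed reading: disparity in predictions minus disparity in labels.

    A positive value means the predictor shows a larger between-group gap
    than the labels it was trained on.
    """
    return group_gap(predictions, groups) - group_gap(labels, groups)


# Toy data (hypothetical): labels have the same mean in both groups,
# but the predictions differ by group.
groups = [0, 0, 1, 1]
labels = [1, 0, 1, 0]
predictions = [0.2, 0.2, 0.8, 0.8]

itv = introduced_total_variation(labels, predictions, groups)
print(f"introduced total variation: {itv:.2f}")
```

In this toy case the labels show no gap between groups while the predictions do, so the value is positive. A predictor that reproduced the labels exactly would score zero under this reading.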

Sessions where this paper appears

-

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

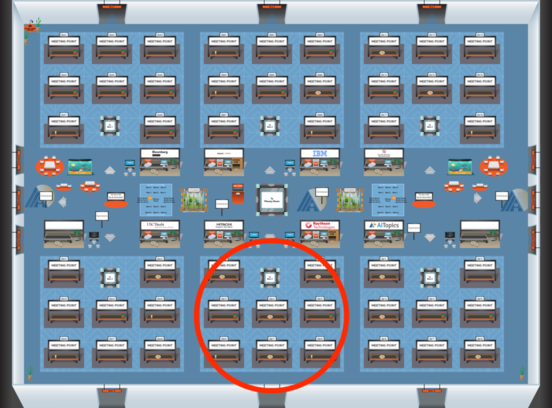

Blue 5

Blue 5

-

Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Blue 5

Blue 5

-

Oral Session 10

Sun, February 27 6:30 PM - 7:45 PM (+00:00)

Sun, February 27 6:30 PM - 7:45 PM (+00:00)

Blue 5

Blue 5