iDECODe: In-Distribution Equivariance for Conformal Out-of-Distribution Detection

Ramneet Kaur, Susmit Jha, Anirban Roy, Sangdon Park, Edgar Dobriban, Oleg Sokolsky, Insup Lee

[AAAI-22] Main Track

Abstract:

Machine learning methods such as deep neural networks (DNNs), despite their success across different domains, are known to often generate incorrect predictions with high confidence on inputs outside their training distribution. The deployment of DNNs in safety-critical domains requires detection of out-of-distribution (OOD) data so that DNNs can abstain from making predictions on such inputs. A number of methods have recently been developed for OOD detection, but there is still room for improvement. We propose a new method, iDECODe, which leverages in-distribution equivariance for conformal OOD detection. It relies on a novel base non-conformity measure and a new aggregation method, used in the inductive conformal anomaly detection framework, thereby guaranteeing a bounded false detection rate. We demonstrate the efficacy of iDECODe through experiments on image and audio datasets, obtaining state-of-the-art results. We also show that iDECODe can detect adversarial examples. Code, pre-trained models, and data are available at https://github.com/ramneetk/iDECODe.
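The abstract attributes the bounded false detection rate to the inductive conformal anomaly detection (ICAD) framework. The following is a minimal sketch of the generic ICAD decision step only, assuming non-conformity scores have already been computed for a held-out in-distribution calibration set and for a test input. It does not implement iDECODe's own equivariance-based non-conformity measure or its aggregation method, which are not specified here; the function names `icad_p_value` and `is_ood` and the parameter `epsilon` are hypothetical.

```python
import numpy as np


def icad_p_value(calibration_scores, test_score):
    """Conformal p-value of a test score against in-distribution calibration scores.

    Counts how many calibration scores are at least as large as the test score,
    adding 1 for the test point itself, and normalizes by n + 1.
    """
    calibration_scores = np.asarray(calibration_scores, dtype=float)
    n = calibration_scores.size
    count = int(np.sum(calibration_scores >= test_score))
    return (count + 1) / (n + 1)


def is_ood(calibration_scores, test_score, epsilon=0.05):
    """Flag the input as OOD when its p-value is at most epsilon.

    If the test input is exchangeable with the calibration data (i.e. it is
    in-distribution), P(p-value <= epsilon) <= epsilon, which bounds the
    false detection rate by epsilon.
    """
    return icad_p_value(calibration_scores, test_score) <= epsilon


if __name__ == "__main__":
    # Placeholder calibration scores 0.01, 0.02, ..., 0.99 (not real data).
    calib = np.arange(1, 100) / 100.0
    print(icad_p_value(calib, 0.5))    # 51 / 100
    print(is_ood(calib, 0.995))        # larger than every calibration score
```

Higher non-conformity means "less like the training data", so a test score that exceeds almost all calibration scores receives a small p-value and is flagged; the guarantee depends only on exchangeability, not on how the scores are computed.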



Sessions where this paper appears

-

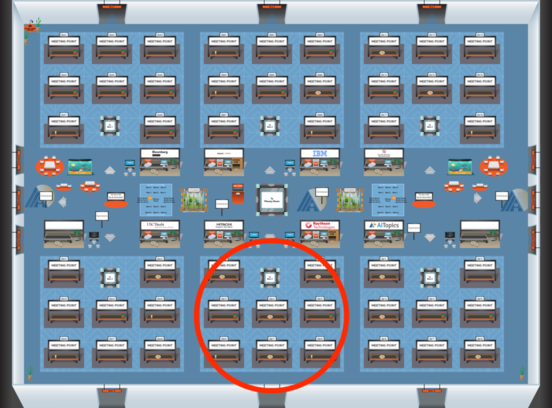

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Blue 5

Blue 5

- Poster Session 9
  Sun, February 27 8:45 AM - 10:30 AM (+00:00)
  Blue 5