Energy-Based Generative Cooperative Saliency Prediction

Jing Zhang, Jianwen Xie, Zilong Zheng, Nick Barnes

[AAAI-22] Main Track

Abstract:

Conventional saliency prediction models typically learn a deterministic mapping from images to the corresponding ground truth saliency maps. In this paper, we study the saliency prediction problem from the perspective of generative models: we learn a conditional probability distribution over saliency maps given an image and treat saliency prediction as a sampling process. Specifically, we propose a generative cooperative saliency prediction framework, in which a conditional latent variable model and a conditional energy-based model are jointly trained to predict saliency in a cooperative manner. The latent variable model serves as a fast but coarse predictor that efficiently produces an initial prediction, which is then refined by iterative Langevin revision under the energy-based model, which serves as a fine predictor. This coarse-to-fine cooperative strategy combines the efficiency of the latent variable model with the accuracy of the energy-based model. We further extend the framework to weakly supervised saliency prediction through a "cooperative learning while recovering" strategy. Experimental results show that our generative model achieves state-of-the-art performance while providing a reliable sampling process for exploring the distribution of plausible saliency maps.
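The coarse-to-fine pipeline described in the abstract can be illustrated with a toy sketch: a latent variable model produces an initial saliency map in a single pass, and Langevin dynamics on an energy-based model then revises it. This is not the authors' implementation. Both "models" here are hypothetical stand-ins (a block-averaging predictor and a fixed quadratic energy with an assumed width `SIGMA`), whereas in the paper both are learned, image-conditioned networks trained jointly. The sketch only shows the standard Langevin revision rule y ← y − (δ²/2)·∇_y E(y | x) + δ·ε with ε ~ N(0, I); the function names `toy_fine_saliency`, `coarse_predictor`, `energy_grad`, `langevin_revise`, and `predict_saliency` are illustrative.

```python
import numpy as np

SIGMA = 0.05  # hypothetical width of the toy energy well


def toy_fine_saliency(image: np.ndarray) -> np.ndarray:
    """Toy stand-in for the fine saliency map (the minimum of the toy energy)."""
    return np.clip(image, 0.0, 1.0)


def coarse_predictor(image: np.ndarray, rng: np.random.Generator, block: int = 4) -> np.ndarray:
    """Hypothetical stand-in for the latent variable model: one fast pass that
    returns a blocky (coarse) map plus a small latent-dependent shift.
    In this toy it reads the same target the energy uses; h and w must be
    divisible by `block`."""
    h, w = image.shape
    fine = toy_fine_saliency(image)
    pooled = fine.reshape(h // block, block, w // block, block).mean(axis=(1, 3))
    coarse = np.kron(pooled, np.ones((block, block)))  # upsample back to (h, w)
    z = rng.standard_normal()  # latent sample: different z gives different outputs
    return coarse + 0.01 * z


def energy_grad(y: np.ndarray, image: np.ndarray) -> np.ndarray:
    """Gradient of the toy energy E(y | x) = ||y - fine(x)||^2 / (2 * SIGMA^2)."""
    return (y - toy_fine_saliency(image)) / SIGMA**2


def langevin_revise(y0, image, rng, steps=300, delta=0.01):
    """Langevin revision: y <- y - (delta^2 / 2) * dE/dy + delta * noise."""
    y = y0.copy()
    for _ in range(steps):
        noise = rng.standard_normal(y.shape)
        y = y - 0.5 * delta**2 * energy_grad(y, image) + delta * noise
    return y


def predict_saliency(image, seed=0):
    """Coarse-to-fine sampling: initial map from the coarse model, then revision."""
    rng = np.random.default_rng(seed)
    coarse = coarse_predictor(image, rng)
    refined = langevin_revise(coarse, image, rng)
    return coarse, refined


image = np.random.default_rng(1).random((16, 16))
coarse, refined = predict_saliency(image)
target = toy_fine_saliency(image)
print("coarse MSE:", np.mean((coarse - target) ** 2))
print("refined MSE:", np.mean((refined - target) ** 2))
```

Because each revision step injects Gaussian noise, different seeds yield different refined maps; this is the sense in which prediction becomes sampling. The step size δ must be small relative to `SIGMA` (here 0.5·δ²/SIGMA² = 0.02 per step), otherwise the update can oscillate or diverge.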

Sessions where this paper appears

-

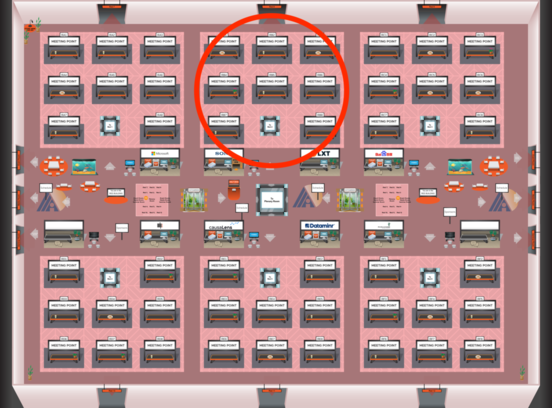

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Red 2

- Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Red 2

- Oral Session 9

Sun, February 27 10:30 AM - 11:45 AM (+00:00)

Red 2
