Braid: Weaving Symbolic and Neural Knowledge into Coherent Logical Explanations

Aditya Kalyanpur, Tom Breloff, David A Ferrucci

[AAAI-22] Main Track

Abstract:

Traditional symbolic reasoning engines, while attractive for their precision and explicability, have a few major drawbacks: the use of brittle inference procedures that rely on exact matching (unification) of logical terms, an inability to deal with uncertainty, and the need for a precompiled rule-base of knowledge (the “knowledge acquisition” problem). To address these issues, we devise a novel logical reasoner called Braid that supports probabilistic rules and uses custom unification functions and dynamic rule generation to overcome the brittle matching and knowledge-gap problems prevalent in traditional reasoners. In this paper, we describe the reasoning algorithms used in Braid and their implementation in a distributed task-based framework that builds proof/explanation graphs for an input query. We use a simple QA example from a children’s story to motivate Braid’s design and explain how the various components work together to produce a coherent logical explanation. Finally, we evaluate Braid on the ROC Story Cloze test and achieve close to state-of-the-art results while providing frame-based explanations.

Sessions where this paper appears

-

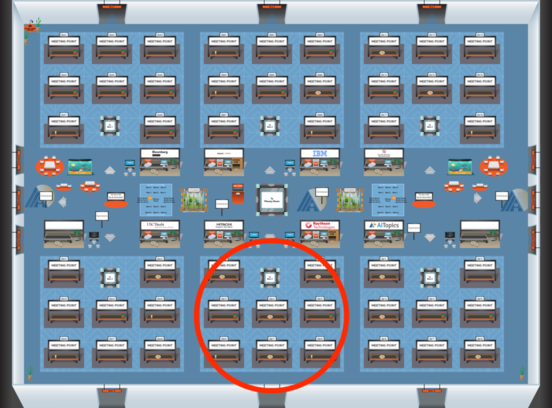

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Blue 5

Blue 5

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 5

Blue 5