Delving into the local: Dynamic Inconsistency Learning for DeepFake Video Detection

Zhihao Gu, Yang Chen, Taiping Yao, Shouhong Ding, Jilin Li, Lizhuang Ma

[AAAI-22] Main Track

Abstract:

The rapid development of facial manipulation techniques has aroused public concern in recent years. Existing deepfake video detection approaches attempt to capture the discriminative features between real and fake faces based on temporal modelling. However, these works impose supervision on sparsely sampled video frames and overlook the local motions among adjacent frames, which encode rich inconsistency information that can serve as an efficient indicator for DeepFake video detection. To mitigate this issue, we delve into the local motion and propose a novel sampling unit named *snippet*, which contains a few successive video frames for local temporal inconsistency learning. Moreover, we design an Intra-Snippet Inconsistency Module (Intra-SIM) and an Inter-Snippet Interaction Module (Inter-SIM) to establish a dynamic inconsistency modelling framework. Specifically, the Intra-SIM applies bi-directional temporal difference operations and a learnable convolution kernel to mine the short-term motions within each snippet. The Inter-SIM is then devised to promote cross-snippet information interaction and form global representations. The Intra-SIM and Inter-SIM work in an alternating manner and can be plugged into existing 2D CNNs. Our method outperforms state-of-the-art competitors on four popular benchmark datasets, i.e., FaceForensics++, Celeb-DF, DFDC and WildDeepfake. Extensive experiments and visualizations are also presented to further illustrate its effectiveness.
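
As an illustration of the snippet idea and the bi-directional temporal difference described in the abstract, here is a minimal sketch in plain Python. It is not the authors' implementation. The function names `sample_snippets` and `bidirectional_differences`, the equal-segment split, the centred placement of each snippet, and the use of flat per-frame feature lists are all assumptions made for illustration. The learnable convolution kernel and the Inter-SIM are not modelled.

```python
from typing import List, Tuple


def sample_snippets(num_frames: int, num_snippets: int, snippet_len: int) -> List[List[int]]:
    """Return frame indices for `num_snippets` snippets of successive frames.

    Assumption (hypothetical scheme): the video is split into equal segments
    and one run of `snippet_len` adjacent frames is taken from the middle of
    each segment.
    """
    if num_snippets <= 0 or snippet_len <= 0:
        raise ValueError("num_snippets and snippet_len must be positive")
    segment = num_frames // num_snippets
    if segment < snippet_len:
        raise ValueError("video too short for the requested snippets")
    snippets = []
    for s in range(num_snippets):
        start = s * segment + (segment - snippet_len) // 2
        snippets.append(list(range(start, start + snippet_len)))
    return snippets


def bidirectional_differences(
    features: List[List[float]],
) -> Tuple[List[List[float]], List[List[float]]]:
    """Compute forward and backward differences between adjacent frames.

    `features` holds one feature vector per frame of a single snippet.
    forward[t]  = x[t+1] - x[t]
    backward[t] = x[t]   - x[t+1]
    Without further processing the two are negatives of each other; in a
    trained model each direction would pass through its own learnable layers,
    which this sketch leaves out.
    """
    forward = []
    backward = []
    for current, following in zip(features, features[1:]):
        forward.append([b - a for a, b in zip(current, following)])
        backward.append([a - b for a, b in zip(current, following)])
    return forward, backward


if __name__ == "__main__":
    # Four snippets of four adjacent frames from a hypothetical 32-frame clip.
    print(sample_snippets(num_frames=32, num_snippets=4, snippet_len=4))
    # Toy two-dimensional features for a three-frame snippet.
    print(bidirectional_differences([[1.0, 2.0], [3.0, 5.0], [6.0, 9.0]]))
```

The contrast with sparse sampling is that every index inside a snippet is adjacent to the next, so the differences capture motion between neighbouring frames rather than between frames that are far apart in time.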

Sessions where this paper appears

-

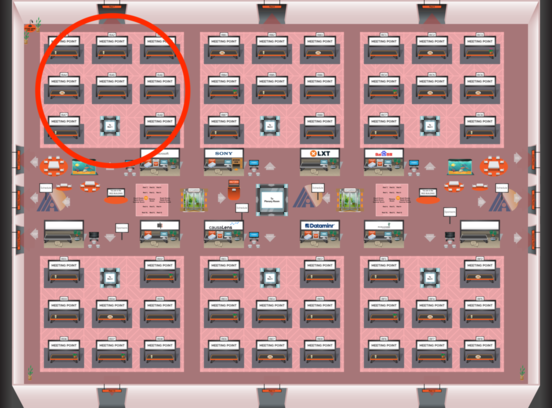

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Red 1

-

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Red 1
