Towards Accurate Facial Motion Retargeting with Identity-Consistent and Expression-Exclusive Constraints

Langyuan Mo, Haokun Li, Chaoyang Zou, Yubing Zhang, Ming Yang, Yihong Yang, Mingkui Tan

[AAAI-22] Main Track

Abstract:

We address facial motion retargeting, which aims to transfer facial motion from a 2D face image to 3D characters. Existing methods often formulate this as a 3D face reconstruction problem, estimating face attributes such as identity and expression from face images. However, because ground-truth labels for both identity and expression are lacking, most 3D face reconstruction-based methods fail to capture facial identity and expression accurately, and may therefore perform poorly. To address this, we propose an identity-consistent constraint that learns accurate identities by encouraging consistent identity prediction across multiple frames. A more accurate identity in turn allows a more accurate facial expression to be obtained. We further propose an expression-exclusive constraint that improves performance by avoiding the co-occurrence of contradictory expression units (e.g., "brow lower" vs. "brow raise"). Extensive experiments on facial motion retargeting and 3D face reconstruction tasks demonstrate the superiority of the proposed method over existing methods.
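The abstract does not give the form of either constraint, so the following is only a minimal illustrative sketch of the two ideas, not the authors' formulation. It assumes that identity is predicted as a coefficient vector per frame and that expression is a set of named unit weights in [0, 1]. The function names, the variance-style penalty, the product-style penalty, and the unit names `brow_lower` and `brow_raise` are all hypothetical.

```python
from typing import Dict, Sequence, Tuple


def identity_consistency_penalty(identity_per_frame: Sequence[Sequence[float]]) -> float:
    """Mean squared deviation of each frame's identity vector from the clip mean.

    Assumption: all frames show the same person, so their predicted identity
    coefficients should agree. The penalty is zero when they are identical.
    """
    n_frames = len(identity_per_frame)
    dim = len(identity_per_frame[0])
    mean = [sum(frame[d] for frame in identity_per_frame) / n_frames for d in range(dim)]
    total = sum(
        (frame[d] - mean[d]) ** 2 for frame in identity_per_frame for d in range(dim)
    )
    return total / (n_frames * dim)


def expression_exclusive_penalty(
    expression: Dict[str, float],
    exclusive_pairs: Sequence[Tuple[str, str]],
) -> float:
    """Sum of products of weights for expression units that should not co-occur.

    Assumption: weights are non-negative, so the product is zero whenever
    at least one unit of a contradictory pair is inactive.
    """
    return sum(expression[a] * expression[b] for a, b in exclusive_pairs)


# Hypothetical usage with made-up values.
pairs = [("brow_lower", "brow_raise")]
consistent = identity_consistency_penalty([[1.0, 2.0], [1.0, 2.0]])
inconsistent = identity_consistency_penalty([[0.0], [2.0]])
conflicting = expression_exclusive_penalty({"brow_lower": 0.5, "brow_raise": 0.5}, pairs)
compatible = expression_exclusive_penalty({"brow_lower": 0.5, "brow_raise": 0.0}, pairs)
```

In a training setup, penalties of this kind would typically be added to the reconstruction loss with tunable weights. Whether the paper uses this form or another is not stated in the abstract.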


Sessions where this paper appears

-

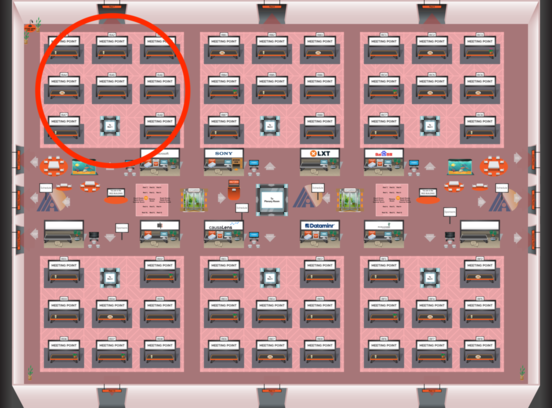

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Red 1

Red 1

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Red 1

Red 1

-

Oral Session 9

Sun, February 27 10:30 AM - 11:45 AM (+00:00)

Sun, February 27 10:30 AM - 11:45 AM (+00:00)

Red 1

Red 1