Negative Sample Matters: A Renaissance of Metric Learning for Temporal Grounding

Zhenzhi Wang, Limin Wang, Tao Wu, Tianhao Li, Gangshan Wu

[AAAI-22] Main Track

Abstract:

Temporal grounding aims to localize a video moment which is semantically aligned with a given natural language query. Existing methods typically apply a detection or regression pipeline on the fused representation with the research focus on designing complicated prediction heads or fusion strategies. Instead, from a perspective on temporal grounding as a metric-learning problem, we present a Mutual Matching Network (MMN), to directly model the similarity between language queries and video moments in a joint embedding space. This new metric-learning framework enables fully exploiting negative samples from two new aspects: constructing negative cross-modal pairs in a mutual matching scheme and mining negative pairs across different videos. These new negative samples could enhance the joint representation learning of two modalities via cross-modal mutual matching to maximize their mutual information. Experiments show that our MMN achieves highly competitive performance compared with the state-of-the-art methods on four video grounding benchmarks. Based on MMN, we present a winner solution for the HC-STVG challenge of the 3rd PIC workshop. This suggests that metric learning is still a promising method for temporal grounding via capturing the essential cross-modal correlation in a joint embedding space. Code is available at https://github.com/MCG-NJU/MMN.

Introduction Video

Sessions where this paper appears

-

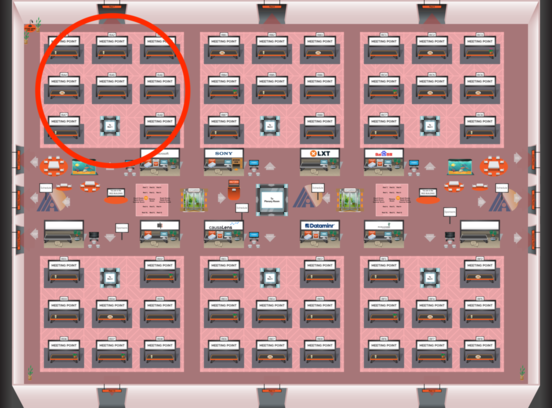

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Red 1

Red 1

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Red 1

Red 1