Combating Adversaries with Anti-Adversaries

Motasem Alfarra, Juan C. Pérez, Ali Thabet, Adel Bibi, Philip H.S. Torr, Bernard Ghanem

[AAAI-22] Main Track

Abstract:

Deep neural networks are vulnerable to small input perturbations known as adversarial attacks. Inspired by the fact that these adversaries are constructed by iteratively minimizing the confidence of a network for the true class label, we propose the anti-adversary layer, aimed at countering this effect. In particular, our layer generates an input perturbation in the opposite direction of the adversarial one and feeds the classifier a perturbed version of the input. Our approach is training-free and theoretically supported. We verify the effectiveness of our approach by combining our layer with both nominally and robustly trained models and conduct large-scale experiments from black-box to adaptive attacks on CIFAR10, CIFAR100, and ImageNet. Our layer significantly enhances model robustness while coming at no cost on clean accuracy.
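The idea in the abstract can be illustrated with a minimal sketch: take the classifier's own predicted label, nudge the input in the direction that lowers the loss for that label (the opposite of what an attacker does), and classify the nudged input. The abstract does not give the update rule, so everything specific here is an assumption made for illustration: the toy linear softmax classifier, the signed gradient steps, and the hypothetical names `anti_adversary_predict`, `steps`, and `alpha`.

```python
import math

def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(W, b, x):
    # Toy linear classifier (assumption): logits = W x + b.
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(logits)

def sign(v):
    return (v > 0) - (v < 0)

def anti_adversary_predict(W, b, x, steps=2, alpha=0.1):
    # Hypothetical anti-adversary wrapper: no training, only input perturbation.
    probs = predict_proba(W, b, x)
    y_hat = max(range(len(probs)), key=probs.__getitem__)  # predicted label
    gamma = [0.0] * len(x)  # anti-adversarial perturbation, starts at zero
    for _ in range(steps):
        shifted = [xi + gi for xi, gi in zip(x, gamma)]
        p = predict_proba(W, b, shifted)
        # Input gradient of cross-entropy w.r.t. y_hat for a linear softmax
        # model: W^T (p - onehot(y_hat)).
        residual = [pk - (1.0 if k == y_hat else 0.0) for k, pk in enumerate(p)]
        grad = [sum(W[k][j] * residual[k] for k in range(len(W)))
                for j in range(len(x))]
        # Descend the loss (an attacker would ascend it instead).
        gamma = [gi - alpha * sign(gj) for gi, gj in zip(gamma, grad)]
    shifted = [xi + gi for xi, gi in zip(x, gamma)]
    return y_hat, predict_proba(W, b, shifted)

W = [[1.0, -1.0], [-1.0, 1.0]]
b = [0.0, 0.0]
x = [0.2, 0.0]
base = predict_proba(W, b, x)
label, boosted = anti_adversary_predict(W, b, x)
print(label, round(base[label], 3), round(boosted[label], 3))
```

In this toy setting the predicted label is unchanged and its confidence rises after the perturbation, which matches the abstract's claim that the layer does not cost clean accuracy. A real model would replace the closed-form gradient with automatic differentiation.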

Sessions where this paper appears

-

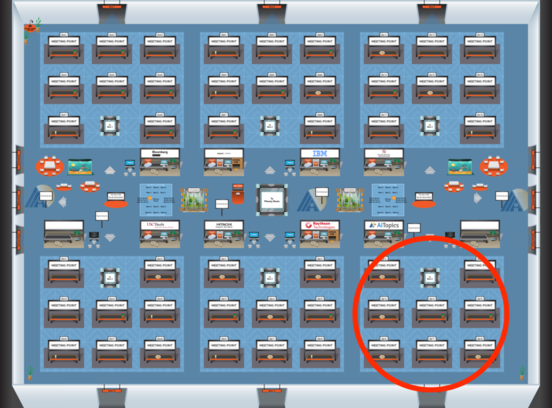

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Blue 6

Blue 6

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 6

Blue 6