Lifelong Hyper-Policy Optimization with Multiple Importance Sampling Regularization

Pierre Liotet, Francesco Vidaich, Alberto Maria Metelli, Marcello Restelli

[AAAI-22] Main Track

Abstract:

Learning in a lifelong setting, where the dynamics continually evolve, is a hard challenge for current reinforcement learning algorithms, yet it is a much-needed capability for practical applications.

In this paper, we propose an approach which learns a hyper-policy, whose input is time, that outputs the parameters of the policy to be queried at that time.

This hyper-policy is trained to maximize the estimated future performance, efficiently reusing past data by means of importance sampling, at the cost of introducing a controlled bias. We combine the future performance estimate with the past performance to mitigate catastrophic forgetting.

To avoid overfitting the collected data, we derive a differentiable variance bound that we embed as a penalization term. Finally, we empirically validate our approach, in comparison with state-of-the-art algorithms, on realistic environments, including water resource management and trading.
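The idea of reusing past data to estimate future performance can be illustrated with a minimal sketch. This is not the authors' estimator. It assumes a one-dimensional Gaussian hyper-policy whose mean is linear in time, a fixed standard deviation, and a balance-heuristic multiple importance sampling weight. All names (`hyper_policy_mean`, `mis_future_estimate`, `rho_old`, `rho_new`) are hypothetical. Treating past returns as if they had been collected at the future time is what introduces bias here.

```python
import numpy as np


def gaussian_pdf(x, mean, std):
    """Density of a univariate Gaussian, evaluated elementwise."""
    z = (x - mean) / std
    return np.exp(-0.5 * z ** 2) / (std * np.sqrt(2.0 * np.pi))


def hyper_policy_mean(rho, t):
    """Assumed hyper-policy: the mean of the policy parameter is a + b * t."""
    a, b = rho
    return a + b * t


def mis_future_estimate(rho_new, rho_old, times, thetas, returns, t_future, std=1.0):
    """Estimate the return at t_future under rho_new from past samples.

    thetas[i] was drawn from the old hyper-policy at times[i] and produced
    returns[i]. Weights follow the balance heuristic: the target density
    divided by the uniform mixture of all behavioural densities.
    """
    times = np.asarray(times, dtype=float)
    thetas = np.asarray(thetas, dtype=float)
    returns = np.asarray(returns, dtype=float)

    # Target density: the new hyper-policy queried at the future time.
    target = gaussian_pdf(thetas, hyper_policy_mean(rho_new, t_future), std)

    # Behavioural mixture: average density of each sample under the old
    # hyper-policy at every past time (rows: samples, columns: past times).
    behaviour_means = hyper_policy_mean(rho_old, times)
    mixture = gaussian_pdf(thetas[:, None], behaviour_means[None, :], std).mean(axis=1)

    weights = target / mixture
    return float(np.mean(weights * returns)), weights
```

A penalized objective in the spirit of the abstract would subtract a variance-related term from this estimate before optimizing `rho_new`; the specific bound derived in the paper is not reproduced here.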

Sessions where this paper appears

-

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

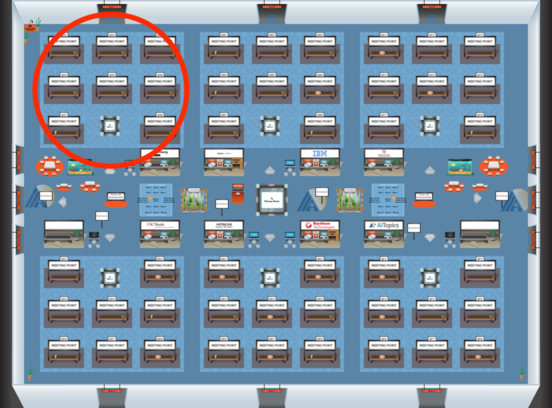

Blue 1

Blue 1

- Poster Session 7: Sat, February 26, 4:45 PM - 6:30 PM (+00:00), Blue 1