Reinforcement Learning of Causal Variables Using Mediation Analysis

Tue Herlau, Rasmus Larsen

[AAAI-22] Main Track

Abstract:

We consider the problem of acquiring causal representations and concepts in a reinforcement learning setting.

Our approach defines a causal variable as one that is both manipulable by a policy and able to predict the outcome.

We thereby obtain a parsimonious causal graph in which interventions occur at the level of policies.

The approach avoids defining a generative model of the data, pre-processing the data, or learning the transition kernel of the Markov decision process.

Instead, causal variables and policies are determined by maximizing a new optimization target, inspired by mediation analysis, that differs from the expected return.
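Mediation analysis splits the total effect of a treatment X on an outcome Y into a part that passes through a mediator M (the natural indirect effect) and a part that does not (the natural direct effect). The abstract does not spell out the paper's target, so the sketch below only shows this textbook decomposition on a hypothetical linear structural causal model. The coefficients and the names `mediator`, `outcome` and `mediation_effects` are assumptions for illustration and are not the authors' formulation.

```python
import numpy as np

# Hypothetical linear structural causal model (assumption, for illustration only):
#   M = 2.0 * X + U_M
#   Y = 0.5 * X + 3.0 * M + U_Y
def mediator(x, u_m):
    return 2.0 * x + u_m

def outcome(x, m, u_y):
    return 0.5 * x + 3.0 * m + u_y

def mediation_effects(x0, x1, n=10_000, seed=0):
    """Monte Carlo estimate of the total, natural direct and natural indirect
    effects of switching X from x0 to x1. The same exogenous noise is reused
    across all counterfactual worlds."""
    rng = np.random.default_rng(seed)
    u_m = rng.normal(size=n)
    u_y = rng.normal(size=n)
    m0 = mediator(x0, u_m)        # M(x0)
    m1 = mediator(x1, u_m)        # M(x1)
    y00 = outcome(x0, m0, u_y)    # Y(x0, M(x0))
    y10 = outcome(x1, m0, u_y)    # Y(x1, M(x0)): change X, hold M at its x0 value
    y11 = outcome(x1, m1, u_y)    # Y(x1, M(x1))
    nde = np.mean(y10 - y00)      # natural direct effect
    nie = np.mean(y11 - y10)      # natural indirect effect
    total = np.mean(y11 - y00)    # total effect = NDE + NIE
    return total, nde, nie

total, nde, nie = mediation_effects(x0=0.0, x1=1.0)
print(total, nde, nie)
```

In this linear model the direct effect is the X coefficient in Y (0.5) and the indirect effect is the product of the X to M and M to Y coefficients (2.0 × 3.0 = 6.0). The estimates should therefore match 0.5 and 6.0 up to floating-point error.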

The maximization is accomplished using a generalization of Bellman's equation that is shown to converge, and the method is shown to find meaningful causal representations in a simulated environment.
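For reference, the standard Bellman optimality equation is V*(s) = max_a [R(s, a) + γ Σ_s' P(s' | s, a) V*(s')]. Iterating it converges because the backup is a γ-contraction in the sup norm. The abstract does not give the paper's generalization, so the sketch below is plain value iteration on a hypothetical two-state MDP. It is not the authors' algorithm, and the function name `value_iteration` and the example MDP are assumptions.

```python
import numpy as np

def value_iteration(P, R, gamma=0.9, tol=1e-8, max_iter=10_000):
    """Standard value iteration (Bellman optimality backup).

    P: array of shape (A, S, S) with P[a, s, t] = Pr(next state t | state s, action a).
    R: array of shape (S, A) with the expected immediate reward R[s, a].
    Returns the approximate optimal value function and a greedy policy.
    """
    n_states = P.shape[1]
    V = np.zeros(n_states)
    for _ in range(max_iter):
        # Q[s, a] = R[s, a] + gamma * sum_t P[a, s, t] * V[t]
        Q = R + gamma * np.einsum("ast,t->sa", P, V)
        V_new = Q.max(axis=1)
        # The backup is a gamma-contraction, so this sup-norm gap shrinks geometrically.
        if np.max(np.abs(V_new - V)) < tol:
            V = V_new
            break
        V = V_new
    return V, Q.argmax(axis=1)

# Hypothetical two-state MDP: action 0 = stay, action 1 = move to absorbing state 1.
P = np.array([
    [[1.0, 0.0], [0.0, 1.0]],  # action 0: stay where you are
    [[0.0, 1.0], [0.0, 1.0]],  # action 1: move to state 1
])
R = np.array([
    [0.0, 1.0],  # state 0: moving pays a reward of 1
    [0.0, 0.0],  # state 1: no further reward
])
V, policy = value_iteration(P, R)
print(V, policy)
```

Here the optimal choice in state 0 is to move and collect the reward once, so the values converge to 1 for state 0 and 0 for state 1.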

Sessions where this paper appears

-

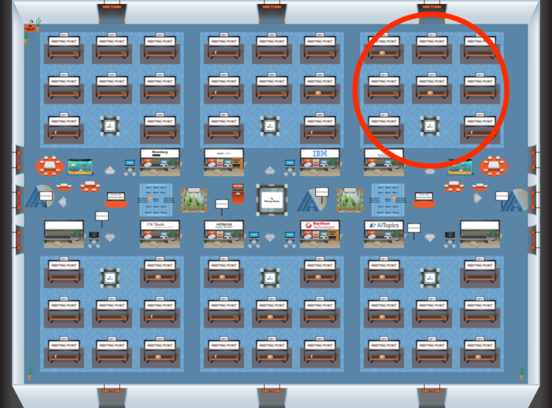

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Blue 3

Blue 3

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 3

Blue 3