Contrast-Enhanced Semi-Supervised Text Classification with Few Labels

Austin Cheng-Yun Tsai, Sheng-Ya Lin, Li-Chen Fu

[AAAI-22] Main Track

Abstract:

Traditional text classification requires thousands of annotated examples or an additional Neural Machine Translation (NMT) system, both of which are expensive to obtain in real applications. This paper presents a Contrast-Enhanced Semi-supervised Text Classification (CEST) framework for label-limited settings that does not incorporate any NMT system. We propose a certainty-driven sample selection method and a contrast-enhanced similarity graph to use data more efficiently in self-training, alleviating the annotation-starving problem. The graph imposes a smoothness constraint on the unlabeled data to improve the coherence and accuracy of the pseudo-labels. Moreover, CEST formulates training as a “learning from noisy labels” problem and performs the optimization accordingly. A salient feature of this formulation is the explicit suppression of the severe error propagation problem found in conventional semi-supervised learning. With only 30 labeled examples per class for both the training and validation sets, CEST outperforms the previous state-of-the-art algorithms by 2.11% accuracy and comes within 3.04% accuracy of fully supervised fine-tuning of a pre-trained language model on thousands of labeled examples.
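The abstract does not give the details of the selection rule or the graph, so the following is only a minimal sketch of the general idea, not the CEST implementation. It assumes that certainty is measured by prediction entropy, that the similarity graph uses cosine similarity between sentence embeddings, and that smoothing is a single weighted-averaging step; the names `select_certain`, `smooth`, `max_entropy`, and `alpha` are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability vector (lower means more certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_certain(pred_probs, max_entropy):
    """Indices of unlabeled samples whose predictions are certain enough.

    Assumption: certainty is thresholded entropy; the paper may use
    a different certainty measure.
    """
    return [i for i, p in enumerate(pred_probs) if entropy(p) <= max_entropy]


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def smooth(pred_probs, embeddings, alpha=0.5):
    """One smoothing step over a dense similarity graph.

    Each prediction is mixed with the similarity-weighted average of the
    other samples' predictions, so nearby samples get coherent pseudo-labels.
    Assumption: non-negative cosine weights and a fixed mixing factor alpha.
    """
    n, k = len(pred_probs), len(pred_probs[0])
    smoothed = []
    for i in range(n):
        weights = [
            max(cosine(embeddings[i], embeddings[j]), 0.0) if j != i else 0.0
            for j in range(n)
        ]
        total = sum(weights)
        if total == 0:  # isolated node: keep its own prediction
            smoothed.append(list(pred_probs[i]))
            continue
        neighbor_avg = [
            sum(weights[j] * pred_probs[j][c] for j in range(n)) / total
            for c in range(k)
        ]
        smoothed.append(
            [(1 - alpha) * pred_probs[i][c] + alpha * neighbor_avg[c] for c in range(k)]
        )
    return smoothed
```

In this toy setup, an uncertain sample whose embedding lies close to a confidently classified one is pulled toward that neighbor's class, and only low-entropy samples are passed on as pseudo-labels for the next self-training round.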

Sessions where this paper appears

-

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

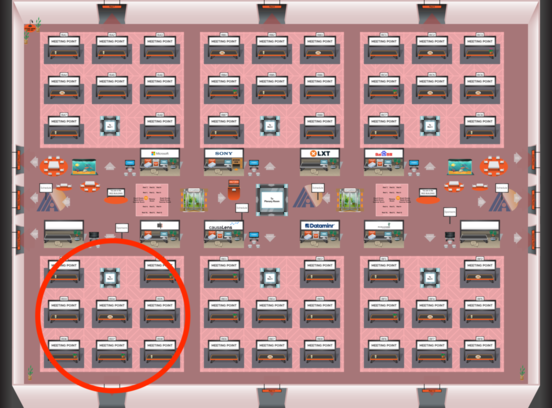

Red 4

-

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Red 4

-

Oral Session 3

Fri, February 25 10:30 AM - 11:45 AM (+00:00)

Red 4
