P^3-Net: Part Mobility Parsing from Point Cloud Sequences via Learning Explicit Point Correspondence

Yahao Shi, Xinyu Cao, Feixiang Lu, Bin Zhou

[AAAI-22] Main Track

Abstract:

Understanding an articulated 3D object and its movable parts is an essential skill for an intelligent agent. This paper presents a novel approach to parsing 3D part mobility from point cloud sequences. The key innovation is learning explicit point correspondence from a raw, unordered point cloud sequence. We propose a novel deep network, P^3-Net, that runs trajectory feature extraction and point correspondence establishment in parallel and optimizes them jointly. Specifically, we design a Match-LSTM module that reaggregates point features across frames using a point correspondence matrix, also called the matching matrix. An attention module computes this matrix, and a Gumbel-Sinkhorn module reduces many-to-one matches to improve the correspondence. We conduct comprehensive evaluations on public benchmarks, including the motion dataset and the PartNet dataset. The results show that our approach outperforms state-of-the-art methods on several part mobility parsing tasks: motion flow prediction, motion part segmentation, and motion attribute (i.e., axis and range) estimation. Moreover, we integrate our approach into a robot perception module to validate its robustness.
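
To make the matching-matrix idea concrete, here is a minimal NumPy sketch under stated assumptions. It is not the authors' implementation: the function names (`attention_scores`, `gumbel_sinkhorn`, `reaggregate`), the use of scaled dot-product similarity as the attention score, and the temperature and iteration settings are all illustrative choices, and the Match-LSTM itself is not shown. The sketch scores every pair of points between two frames, applies alternating row and column normalization in log space (the Sinkhorn step, with optional Gumbel noise) to discourage many-to-one matches, and then uses the resulting soft matching matrix to reorder the second frame's features.

```python
import numpy as np


def attention_scores(feat_a, feat_b):
    """Pairwise similarity between per-point features of two frames.

    Assumption: scaled dot-product attention; the paper's attention
    module may be defined differently.
    """
    d = feat_a.shape[1]
    return feat_a @ feat_b.T / np.sqrt(d)


def _logsumexp(x, axis):
    # Numerically stable log-sum-exp that keeps the reduced axis.
    m = x.max(axis=axis, keepdims=True)
    return m + np.log(np.exp(x - m).sum(axis=axis, keepdims=True))


def gumbel_sinkhorn(scores, tau=0.1, n_iters=20, noise_scale=0.0, rng=None):
    """Turn a score matrix into an approximately doubly stochastic one.

    tau, n_iters and noise_scale are hypothetical defaults.
    """
    if noise_scale > 0:
        rng = np.random.default_rng() if rng is None else rng
        u = rng.uniform(1e-9, 1.0, size=scores.shape)
        scores = scores + noise_scale * -np.log(-np.log(u))  # Gumbel noise
    log_p = scores / tau
    for _ in range(n_iters):
        log_p = log_p - _logsumexp(log_p, axis=1)  # normalize rows
        log_p = log_p - _logsumexp(log_p, axis=0)  # normalize columns
    return np.exp(log_p)


def reaggregate(match, feat_b):
    """Reorder frame-b features into frame-a point order (soft gather)."""
    return match @ feat_b


# Small demo: frame b holds the same points as frame a in shuffled order.
rng = np.random.default_rng(0)
demo_a = rng.normal(size=(6, 8))
demo_b = demo_a[rng.permutation(6)]
demo_match = gumbel_sinkhorn(attention_scores(demo_a, demo_b))
demo_aligned = reaggregate(demo_match, demo_b)
```

Lower values of `tau` push the matching matrix closer to a hard permutation, while higher values keep it soft; in a trained network the matrix would stay differentiable so that correspondence and trajectory features can be optimized together, as the abstract describes.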

Sessions where this paper appears

-

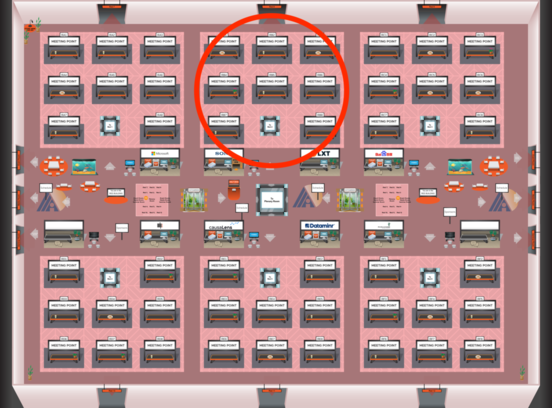

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Red 2

Red 2

-

Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Red 2

Red 2