Leashing the Inner Demons: Self-Detoxification for Language Models

Canwen Xu, Zexue He, Zhankui He, Julian McAuley

[AAAI-22] Main Track

Abstract:

Language models (LMs) can reproduce (or amplify) toxic language seen during training, which poses a risk to their practical application. In this paper, we conduct extensive experiments to study this phenomenon. We analyze the impact of prompts, decoding strategies, and training corpora on output toxicity. Based on our findings, we propose a simple yet effective unsupervised method for language models to "detoxify" themselves without an additional large corpus or external discriminator. Compared to a supervised baseline, our proposed method shows better toxicity reduction while maintaining good generation quality under multiple settings.

Sessions where this paper appears

-

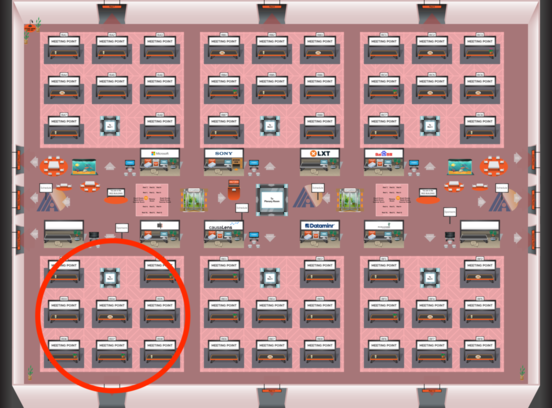

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Red 4

-

Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Red 4
