EtinyNet: Extremely Tiny Network for TinyML

Kunran Xu, Yishi Li, Huawei Zhang, Rui Lai, Lin Gu

[AAAI-22] Main Track

Abstract:

Running Convolutional Neural Networks (CNNs) on IoT devices with severely restricted resources, e.g. microcontroller units (MCUs) and field-programmable gate arrays (FPGAs), is an important and challenging topic known as tiny machine learning (TinyML). To meet the very limited storage and power budgets of IoT devices, it is essential to reduce the number of CNN parameters as much as possible. In this paper, we aim to develop an efficient tiny model with only hundreds of KB of parameters. To this end, we first design a parameter-efficient tiny architecture by introducing the dense linear depthwise block. Then, we propose a novel adaptive scale quantization (ASQ) method that quantizes tiny models to aggressively low bit-widths while retaining accuracy. With the optimized architecture and 4-bit ASQ, we present a family of ultra-lightweight networks, named EtinyNet, that achieves 57.0% ImageNet top-1 accuracy with an extremely tiny model size of 340 KB. When deployed on an off-the-shelf commercial microcontroller for object detection, EtinyNet achieves a state-of-the-art 56.4% mAP on Pascal VOC. Furthermore, experiments on a compact Xilinx FPGA show that EtinyNet consumes only 620 mW, about 5.6x less power than existing FPGA designs.
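To make the link between bit-width and model size concrete, here is a minimal sketch of generic uniform symmetric per-tensor weight quantization together with a storage estimate. It is NOT the paper's adaptive scale quantization (ASQ), whose details this page does not give; the helper names `quantize_symmetric`, `dequantize` and `storage_bytes` are hypothetical. The storage arithmetic assumes every weight is stored at the same bit-width and ignores scale factors and any higher-precision layers, so it is only a rough inverse calculation: at 4 bits per weight, 340 KB (decimal) corresponds to about 680K weights.

```python
import numpy as np

def quantize_symmetric(weights: np.ndarray, bits: int = 4):
    """Generic uniform symmetric per-tensor quantization (illustrative, not ASQ)."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for signed 4-bit
    scale = float(np.max(np.abs(weights))) / qmax   # one scale for the whole tensor
    if scale == 0.0:
        scale = 1.0                                 # avoid division by zero for all-zero tensors
    # Round to the nearest integer level and keep it inside the signed range.
    q = np.clip(np.round(weights / scale), -qmax - 1, qmax).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map integer levels back to approximate float weights."""
    return q.astype(np.float32) * scale

def storage_bytes(num_params: int, bits: int) -> int:
    """Bytes needed to pack num_params weights at the given bit-width (rounded up)."""
    return (num_params * bits + 7) // 8
```

For example, `storage_bytes(680_000, 4)` gives 340,000 bytes, while the same weights in 32-bit floating point would need 2,720,000 bytes, an 8x difference. Since the largest-magnitude weight maps exactly to level 7, no value is clipped and the reconstruction error of `dequantize` is at most half a quantization step.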

Sessions where this paper appears

-

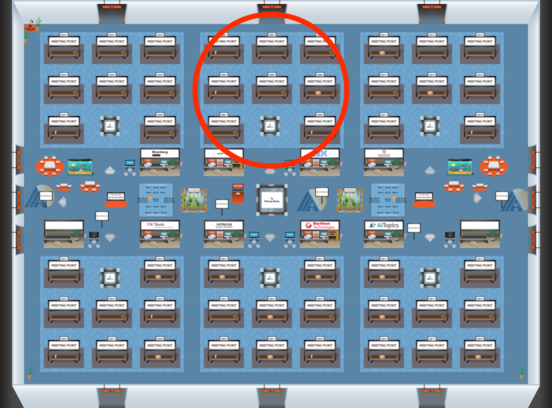

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Blue 2

Blue 2

-

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Blue 2

Blue 2