Zero Stability Well Predicts Performance of Convolutional Neural Networks

Liangming Chen, Long Jin, Mingsheng Shang

[AAAI-22] Main Track

Abstract:

Which convolutional neural network (CNN) structures perform well is a fascinating question. In this work, we take one more step toward an answer by connecting zero stability with model performance. Specifically, we find that if a discrete ordinary differential equation is zero stable, its corresponding CNN performs well. We first interpret zero stability in the context of deep learning and then investigate the performance of existing first- and second-order CNNs under different zero-stability settings. Based on these preliminary observations, we use a higher-order discretization to construct CNNs and propose a zero-stable network (ZeroSNet). To guarantee the zero stability of ZeroSNet, we first derive a structure that satisfies the consistency conditions and then give a zero-stable region for a training-free parameter. By analyzing the roots of a characteristic equation, we theoretically obtain the optimal coefficients of the feature maps. Empirically, we present results from three aspects: (1) extensive evidence across different network widths and datasets shows that the moduli of the characteristic equation's roots are key to the performance of CNNs that require historical features; (2) ZeroSNet outperforms existing CNNs based on high-order discretization; and (3) ZeroSNets are more robust to noise in the input. The source code is available at https://github.com/logichen/ZeroSNet.
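Zero stability here is the classical root condition for linear multistep methods. If the history part of a discretized update is written as a first characteristic polynomial ρ(z), the scheme is zero stable when every root of ρ lies in the closed unit disk and every root on the unit circle is simple. The Python sketch below checks that condition with NumPy. It is not the ZeroSNet construction: the two-step update, the mixing parameter `k`, and the helper names `is_zero_stable` and `two_step_rho` are hypothetical illustrations, and the tolerance is an assumption chosen to absorb floating-point error in `numpy.roots`.

```python
import numpy as np


def is_zero_stable(rho_coeffs, tol=1e-6):
    """Check the root condition for a first characteristic polynomial rho(z).

    rho_coeffs lists the coefficients of rho(z) from the highest power down,
    the order expected by numpy.roots. The scheme is zero stable iff every
    root satisfies |z| <= 1 and every root with |z| == 1 is simple.
    """
    roots = np.roots(rho_coeffs)
    moduli = np.abs(roots)
    # Any root strictly outside the unit circle violates the root condition.
    if np.any(moduli > 1 + tol):
        return False
    # Roots on the unit circle must not be repeated. The tolerance is loose
    # because numpy.roots can split a double root by roughly sqrt(machine eps).
    on_circle = roots[np.abs(moduli - 1) <= tol]
    for i in range(len(on_circle)):
        for j in range(i + 1, len(on_circle)):
            if abs(on_circle[i] - on_circle[j]) <= tol:
                return False
    return True


def two_step_rho(k):
    """Hypothetical example: rho(z) = z^2 - (1 - k) z - k for the update
    x_{n+1} = (1 - k) x_n + k x_{n-1} + f(x_n); its roots are 1 and -k."""
    return [1.0, -(1.0 - k), -k]


if __name__ == "__main__":
    # Forward Euler (a ResNet-style step), x_{n+1} = x_n + f(x_n): rho(z) = z - 1.
    print("Euler zero stable:", is_zero_stable([1.0, -1.0]))
    # Sweep the hypothetical mixing parameter k and report the root moduli.
    for k in (-1.0, -0.5, 0.5, 1.0, 1.5):
        moduli = np.sort(np.abs(np.roots(two_step_rho(k))))
        print(f"k={k:+.1f}  |roots|={np.round(moduli, 3)}  "
              f"zero stable: {is_zero_stable(two_step_rho(k))}")
```

For this illustrative update, ρ(z) = (z - 1)(z + k), so the root moduli are 1 and |k|. The scheme is zero stable for -1 < k ≤ 1; k = -1 gives a double root at z = 1, and |k| > 1 places a root outside the unit circle. Only the coefficients on the historical features enter ρ, which is why the moduli of its roots, rather than the residual branch f, decide zero stability.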


Sessions where this paper appears

-

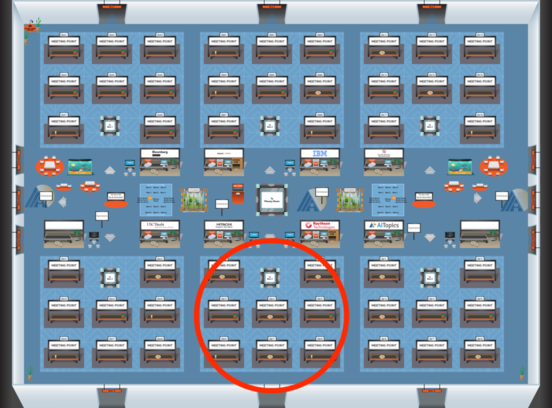

Poster Session 6

Blue 5

Blue 5

-

Poster Session 12

Blue 5

Blue 5