Balanced Self-Paced Learning for AUC Maximization

Chenkang Zhang, Bin Gu, Huan Xiong, Heng Huang

[AAAI-22] Main Track

Abstract:

Learning to improve AUC performance is an important topic in machine learning. However, AUC maximization algorithms may decrease generalization performance due to the noisy data. Self-paced learning is an effective method for handling noisy data. However, existing self-paced learning methods are limited to pointwise learning, while AUC maximization is a pairwise learning problem. To solve this challenging problem, we innovatively propose a balanced self-paced AUC maximization algorithm (BSPAUC). Specifically, we first provide a statistical objective for self-paced AUC.

Based on this, we propose our self-paced AUC maximization formulation, where a novel balanced self-paced regularization term is embedded to ensure that the selected positive and negative samples have proper proportions. Specially, the sub-problem with respect to all weight variables may be non-convex in our formulation, while the one is normally convex in existing self-paced problems. To address this, we propose a doubly cyclic block coordinate descent method.

More importantly, we prove that the sub-problem with respect to all weight variables converges to a stationary point on the basis of closed-form solutions, and our BSPAUC converges to a stationary point of our fixed optimization objective under a mild assumption. Considering both the deep learning and kernel-based implementations, experimental results on several large-scale datasets demonstrate that our BSPAUC has a better generalization performance than existing state-of-the-art AUC maximization methods.

Based on this, we propose our self-paced AUC maximization formulation, where a novel balanced self-paced regularization term is embedded to ensure that the selected positive and negative samples have proper proportions. Specially, the sub-problem with respect to all weight variables may be non-convex in our formulation, while the one is normally convex in existing self-paced problems. To address this, we propose a doubly cyclic block coordinate descent method.

More importantly, we prove that the sub-problem with respect to all weight variables converges to a stationary point on the basis of closed-form solutions, and our BSPAUC converges to a stationary point of our fixed optimization objective under a mild assumption. Considering both the deep learning and kernel-based implementations, experimental results on several large-scale datasets demonstrate that our BSPAUC has a better generalization performance than existing state-of-the-art AUC maximization methods.

Introduction Video

Sessions where this paper appears

-

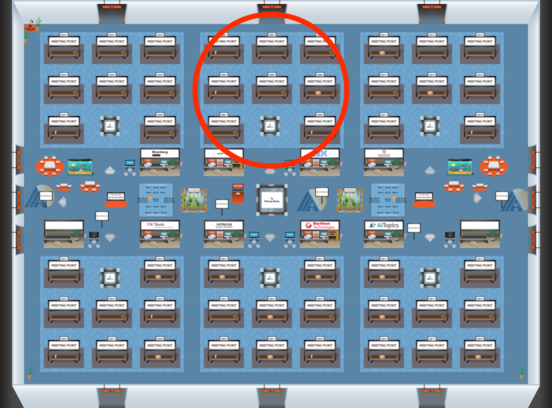

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Blue 2

Blue 2

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 2

Blue 2