InsCLR: Improving Instance Retrieval with Self-Supervision

Zelu Deng, Yujie Zhong*, Sheng Guo, Weilin Huang

[AAAI-22] Main Track

Abstract:

This work aims at improving instance retrieval with self-supervision. We find that fine-tuning using the recently developed self-supervised learning (SSL) methods, such as SimCLR and MoCo, fails to improve the performance of instance retrieval. We identify that the learnt representations for instance retrieval should be invariant to large variations in viewpoint, background, and similar factors, whereas the self-augmented positives used by current SSL methods cannot provide strong enough signals for learning robust instance-level representations. To overcome this problem, we propose InsCLR, a new SSL method that builds on instance-level contrast to learn intra-class invariance by dynamically mining meaningful pseudo-positive samples from both mini-batches and a memory bank during training. Extensive experiments demonstrate that InsCLR achieves similar or even better performance than the state-of-the-art SSL methods on instance retrieval. Code is available at https://github.com/zeludeng/insclr.

Sessions where this paper appears

-

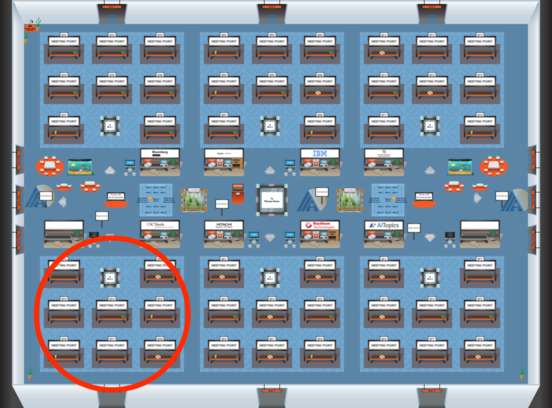

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Blue 4

- Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Blue 4
