Distillation of RL Policies with Formal Guarantees via Variational Abstraction of Markov Decision Processes

Florent Delgrange, Ann Nowé, Guillermo A. Pérez

[AAAI-22] Main Track

Abstract:

We consider the challenge of policy simplification and verification in the context of policies learned through reinforcement learning in continuous environments. In well-behaved settings, reinforcement learning (RL) algorithms have convergence guarantees in the limit. While these guarantees are valuable, they are insufficient for safety-critical applications. Furthermore, they are lost altogether when applying advanced techniques such as deep RL. To recover guarantees when applying advanced RL algorithms to more complex environments with (i) reachability, (ii) safety-constrained reachability, or (iii) discounted-reward objectives, we build upon the DeepMDP framework introduced by Gelada et al. to derive new bisimulation bounds between the unknown environment and a learnt discrete latent model of it. Our bisimulation bounds enable the application of formal methods for Markov decision processes. Finally, we show how a policy obtained via state-of-the-art RL can be used to efficiently train a variational autoencoder that yields a discrete latent model with provably approximately correct bisimulation guarantees, together with a distilled version of the policy for the latent model.
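Once a discrete latent model and a distilled latent policy are available, fixing the policy turns the latent MDP into a finite Markov chain, and the three objectives named above become standard quantities on that chain. The sketch below illustrates this step only; it is not the authors' implementation, it does not compute the bisimulation bounds that relate latent values to the true environment, and the 4-state chain, function names, and parameters (`constrained_reachability`, `discounted_value`, `tol`, `max_iters`) are hypothetical.

```python
from typing import List, Set

# A finite Markov chain as a row-stochastic matrix: P[s][t] is the probability
# of moving from latent state s to latent state t under a fixed latent policy.
Matrix = List[List[float]]


def constrained_reachability(P: Matrix, target: Set[int], avoid: Set[int],
                             tol: float = 1e-12, max_iters: int = 100_000) -> List[float]:
    """Per-state probability of reaching `target` without first visiting `avoid`.

    Value iteration started from below converges to the least fixed point,
    i.e. the reachability probability. Plain reachability is avoid=set().
    """
    n = len(P)
    x = [1.0 if s in target else 0.0 for s in range(n)]
    for _ in range(max_iters):
        new = [
            1.0 if s in target
            else 0.0 if s in avoid
            else sum(P[s][t] * x[t] for t in range(n))
            for s in range(n)
        ]
        delta = max(abs(a - b) for a, b in zip(new, x))
        x = new
        if delta < tol:
            break
    return x


def discounted_value(P: Matrix, reward: List[float], gamma: float,
                     tol: float = 1e-12, max_iters: int = 100_000) -> List[float]:
    """Per-state expected discounted sum of state rewards: V = r + gamma * P V."""
    n = len(P)
    v = [0.0] * n
    for _ in range(max_iters):
        new = [reward[s] + gamma * sum(P[s][t] * v[t] for t in range(n))
               for s in range(n)]
        delta = max(abs(a - b) for a, b in zip(new, v))
        v = new
        if delta < tol:
            break
    return v


# Hypothetical chain: 0 = start, 1 = intermediate, 2 = goal (absorbing),
# 3 = unsafe state that still leads to the goal afterwards.
P = [
    [0.0, 0.5, 0.3, 0.2],
    [0.0, 0.0, 0.6, 0.4],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
reach = constrained_reachability(P, target={2}, avoid=set())       # (i)
safe_reach = constrained_reachability(P, target={2}, avoid={3})    # (ii)
value = discounted_value(P, reward=[0.0, 0.0, 1.0, 0.0], gamma=0.9)  # (iii)
print(round(reach[0], 6), round(safe_reach[0], 6), round(value[0], 6))
# → 1.0 0.6 8.208
```

The example shows why the safety constraint matters: from the start state the goal is reached almost surely, but only with probability 0.6 when paths through the unsafe state are excluded. Value iteration is used here only to keep the sketch dependency-free; a probabilistic model checker would typically compute these quantities on the latent model instead.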

Sessions where this paper appears

-

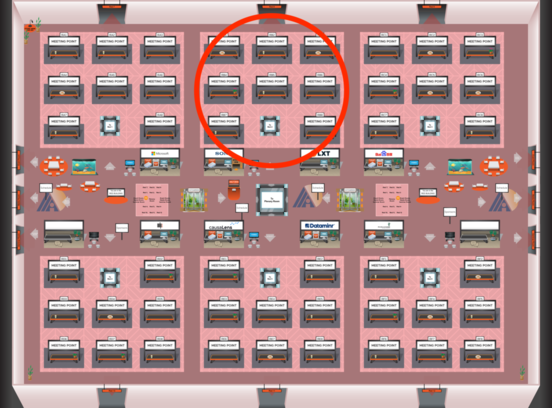

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Red 2

Red 2

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Red 2

Red 2