LogicDef: An Interpretable Defense Framework Against Adversarial Examples via Inductive Scene Graph Reasoning

Yuan Yang, James C Kerce, Faramarz Fekri

[AAAI-22] Main Track

Abstract:

Deep vision models are successfully employed in many applications. However, recent studies have shown that they are vulnerable to adversarial example attacks. Existing adversarial defense methods are either limited to specific types of attacks or are too complex to be applied to practical vision models. More importantly, these methods rely on techniques that are not interpretable to humans. In this work, we argue that an effective defense method should produce decisions that explain why the system is attacked, using a representation that is easily readable by a human user, e.g., a logic formalism. To this end, we propose logic adversarial defense (LogicDef), a defense framework that utilizes the scene graph of the image to provide a contextual structure for detecting and explaining object misclassification. Our framework first mines, from the scene graph, inductive logic rules that predict the objects, and then uses these rules to construct a defense model that alerts the user when the vision model's predictions violate the rules. The defense model is interpretable, and its robustness can be further enhanced by incorporating commonsense knowledge from ConceptNet. Moreover, we propose a curriculum learning scheme for the defense model, based on object taxonomy, which yields additional improvements in performance.
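The abstract describes the defense only at a high level. To make the idea of "alerting when the vision model violates the rules" concrete, here is a minimal Python sketch. It assumes a scene graph encoded as (subject, relation, object) triples over predicted labels, and a single-hop rule form of the shape "if x has relation R to an object labeled L, then x's label should be in S". The names `Rule` and `check_rules`, the rule form, and the example labels are hypothetical illustrations, not the authors' LogicDef implementation; in particular, the rules here are hand-written rather than mined, and no ConceptNet knowledge or curriculum learning is involved.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical scene graph edge format: (subject_id, relation, object_id).
Edge = Tuple[int, str, int]


@dataclass(frozen=True)
class Rule:
    """Hypothetical single-hop rule (hand-written here, not mined):
    if node x has `relation` to a node labeled `neighbor_label`,
    then x's predicted label should be one of `allowed`."""
    relation: str
    neighbor_label: str
    allowed: FrozenSet[str]


def check_rules(labels: Dict[int, str], edges: List[Edge],
                rules: List[Rule]) -> Dict[int, List[Rule]]:
    """Return, per node, the rules whose body holds but whose head is violated."""
    violations: Dict[int, List[Rule]] = {}
    for subj, rel, obj in edges:
        for rule in rules:
            body_holds = rel == rule.relation and labels[obj] == rule.neighbor_label
            if body_holds and labels[subj] not in rule.allowed:
                violations.setdefault(subj, []).append(rule)
    return violations


# Illustrative usage with made-up labels: node 2 plays the role of an
# object whose label was flipped, e.g. by an adversarial perturbation.
rules = [Rule("on", "road", frozenset({"car", "bus", "person", "bicycle"}))]
labels = {0: "road", 1: "car", 2: "toaster"}
edges = [(1, "on", 0), (2, "on", 0)]
violations = check_rules(labels, edges, rules)
for node, broken in violations.items():
    print(f"alert: object {node} predicted as {labels[node]!r} "
          f"violates {len(broken)} rule(s)")
```

Because each alert carries the specific rule that was broken, the flag is readable by a human, which is the interpretability property the abstract emphasizes; a learned defense model would replace the hard check with scores over many such rules.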


Sessions where this paper appears

-

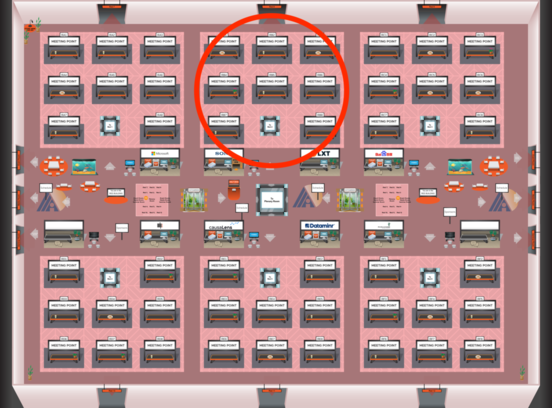

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Red 2

Red 2

- Poster Session 11: Mon, February 28, 12:45 AM - 2:30 AM (+00:00), Red 2

- Oral Session 4: Fri, February 25, 6:45 PM - 8:00 PM (+00:00), Red 2