Domain-Lifted Sampling for Universal Two-Variable Logic and Extensions

Yuanhong Wang, Timothy van Bremen, Yuyi Wang, Ondřej Kuželka

[AAAI-22] Main Track

Abstract:

Given a first-order sentence $\Gamma$ and a domain size $n$, how can one sample a model of $\Gamma$ on the domain $\{1, \dots, n\}$ efficiently as $n$ scales? We consider two variants of this problem: the \textit{uniform} sampling regime, in which the goal is to sample a model uniformly at random, and the \textit{symmetric weighted} sampling regime, in which models are weighted according to the number of groundings of each predicate appearing in them. Solutions to this problem have applications to the scalable generation of combinatorial structures, as well as sampling in several statistical-relational models such as Markov logic networks and probabilistic logic programs. In this paper, we identify certain classes of sentences that are \textit{domain-liftable under sampling}, in the sense that they admit a sampling algorithm that runs in time polynomial in $n$. In particular, we prove that every sentence of the form $\forall x \forall y : \psi(x,y)$ for some quantifier-free formula $\psi(x,y)$ is domain-liftable under sampling. We then further show that this result continues to hold in the presence of one or more \textit{cardinality constraints} as well as a single \textit{tree axiom} constraint.

Introduction Video

Sessions where this paper appears

-

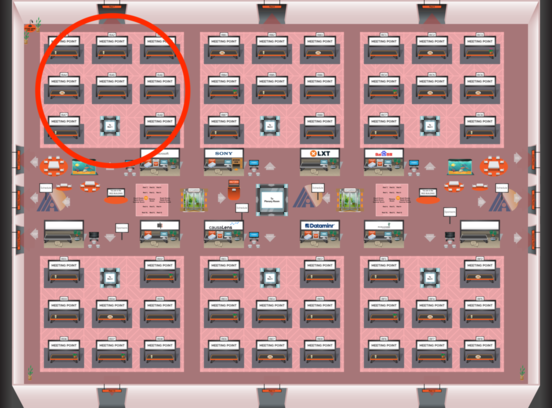

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Red 1

Red 1

-

Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Red 1

Red 1