Controlling Underestimation Bias in Reinforcement Learning via Quasi-Median Operation

Wei Wei, Yujia Zhang, Jiye Liang, Lin Li, Yuze Li

[AAAI-22] Main Track

Abstract:

Obtaining accurate value estimates is one of the key problems in reinforcement learning (RL). Current off-policy methods, such as Maxmin Q-learning, TD3 and TADD, suffer from underestimation bias while trying to correct overestimation bias. In this paper, we propose the Quasi-Median Operation, a novel way to mitigate underestimation bias by selecting the quasi-median from multiple state-action value estimates. Based on the quasi-median operation, we propose Quasi-Median Q-learning (QMQ) for discrete action tasks and Quasi-Median Delayed Deep Deterministic Policy Gradient (QMD3) for continuous action tasks. Theoretically, our method has a smaller underestimation bias and a significantly lower estimation variance than Maxmin Q-learning, TD3 and TADD. We conduct extensive experiments on discrete and continuous action tasks, and the results show that our method outperforms state-of-the-art methods.
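As a rough illustration of the idea, the sketch below computes a one-step Q-learning target from N value estimates, once with a Maxmin-style minimum and once with a quasi-median. It is not the authors' implementation. The abstract does not define the quasi-median, so `quasi_median` here assumes the ⌈N/2⌉-th smallest estimate (the ordinary median for odd N, the lower middle value for even N). The target form (aggregate over estimators per action, then maximize over actions) is also assumed by analogy with Maxmin Q-learning. All function names are hypothetical.

```python
from typing import Callable, Sequence


def quasi_median(values: Sequence[float]) -> float:
    """Return the ceil(N/2)-th smallest of N estimates (hypothetical definition).

    For odd N this is the ordinary median; for even N it is the lower of the
    two middle values. ASSUMPTION: the paper's exact definition may differ.
    """
    if len(values) == 0:
        raise ValueError("need at least one estimate")
    ordered = sorted(values)
    return ordered[(len(ordered) - 1) // 2]


def _target(
    reward: float,
    next_q: Sequence[Sequence[float]],
    gamma: float,
    done: bool,
    aggregate: Callable[[Sequence[float]], float],
) -> float:
    # next_q[i][a] is estimator i's value for action a in the next state s'.
    n_actions = len(next_q[0])
    # Aggregate across estimators for each action, then take the greedy action.
    per_action = [aggregate([q[a] for q in next_q]) for a in range(n_actions)]
    bootstrap = 0.0 if done else max(per_action)
    return reward + gamma * bootstrap


def maxmin_target(reward, next_q, gamma, done=False) -> float:
    # Maxmin Q-learning style: pessimistic minimum over the estimators.
    return _target(reward, next_q, gamma, done, min)


def qmq_target(reward, next_q, gamma, done=False) -> float:
    # Quasi-median style (assumed form): middle order statistic over estimators.
    return _target(reward, next_q, gamma, done, quasi_median)


if __name__ == "__main__":
    next_q = [[1.0, 4.0], [2.0, 0.0], [3.0, 5.0]]  # 3 estimators x 2 actions
    print(maxmin_target(1.0, next_q, gamma=0.5))  # 1.5
    print(qmq_target(1.0, next_q, gamma=0.5))     # 3.0
```

With three estimators and two actions, the quasi-median target (3.0) is less pessimistic than the Maxmin target (1.5), which is the intuition behind reducing underestimation. Under the assumed definition, `quasi_median` behaves like Python's `statistics.median_low`; whether this matches the exact QMQ update should be checked against the paper.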

Sessions where this paper appears

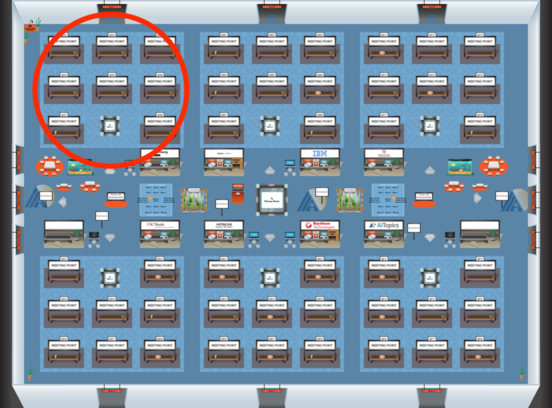

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Blue 1

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Blue 1