A Provably-Efficient Model-Free Algorithm for Infinite-Horizon Average-Reward Constrained Markov Decision Processes

Honghao Wei, Xin Liu, Lei Ying

[AAAI-22] Main Track

Abstract:

Model-free reinforcement learning (RL) algorithms are known to be more memory- and computation-efficient than model-based approaches. This paper presents a model-free RL algorithm for infinite-horizon average-reward Constrained Markov Decision Processes (CMDPs) that achieves sub-linear regret and zero constraint violation. To the best of our knowledge, this is the first *model-free* algorithm for general CMDPs in the infinite-horizon average-reward setting with provable guarantees.
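For orientation, here is a sketch of a commonly used formulation of the quantities the abstract refers to. It is not taken from the paper. The symbols are assumptions chosen for illustration and may differ from the authors' exact definitions:

- reward $r$ and utility $g$;
- constraint threshold $\rho$;
- time horizon $T$;
- optimal policy $\pi^*$.

```latex
\begin{aligned}
J_r^{\pi} &= \liminf_{T \to \infty} \frac{1}{T}\, \mathbb{E}_{\pi}\Big[\sum_{t=1}^{T} r(s_t, a_t)\Big],
\qquad
J_g^{\pi} = \liminf_{T \to \infty} \frac{1}{T}\, \mathbb{E}_{\pi}\Big[\sum_{t=1}^{T} g(s_t, a_t)\Big],\\
\pi^{*} &\in \arg\max_{\pi}\; J_r^{\pi} \quad \text{s.t.} \quad J_g^{\pi} \ge \rho,\\
\mathrm{Regret}(T) &= T\, J_r^{\pi^{*}} - \mathbb{E}\Big[\sum_{t=1}^{T} r(s_t, a_t)\Big],
\qquad
\mathrm{Violation}(T) = \mathbb{E}\Big[\sum_{t=1}^{T} \big(\rho - g(s_t, a_t)\big)\Big].
\end{aligned}
```

Under this assumed formulation, "sub-linear regret" means $\mathrm{Regret}(T) = o(T)$. "Zero constraint violation" means $\mathrm{Violation}(T) \le 0$ once $T$ is large enough.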

Sessions where this paper appears

-

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

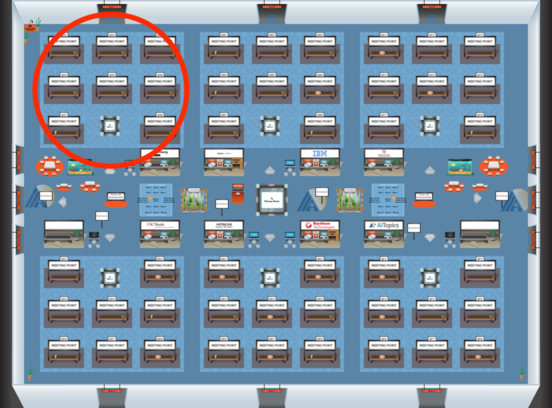

Blue 1

Blue 1

-

Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Blue 1

Blue 1