Conjugated Discrete Distributions for Distributional Reinforcement Learning

Björn Lindenberg, Jonas Nordqvist, Karl-Olof Lindahl

[AAAI-22] Main Track

Abstract:

In this work we build upon recent advances in reinforcement learning for finite Markov processes. A common approach among existing algorithms, both single-actor and distributed, is to either clip rewards or apply a transformation to Q-functions in order to handle the wide range of magnitudes in real discounted returns. We show theoretically that one of the most successful methods may not yield an optimal policy if the process is non-deterministic. As a solution, we argue that distributional reinforcement learning lends itself to remedying this situation completely. By introducing a conjugate distributional operator, we can handle a large class of transformations for real returns with guaranteed theoretical convergence. We propose an approximating single-actor algorithm based on this operator that trains agents directly on raw rewards using a proper distributional metric given by the Cramér distance. To evaluate its performance in a stochastic setting, we train agents on a suite of 55 Atari 2600 games using sticky actions and obtain state-of-the-art performance compared to other well-known algorithms in the Dopamine framework.

Sessions where this paper appears

-

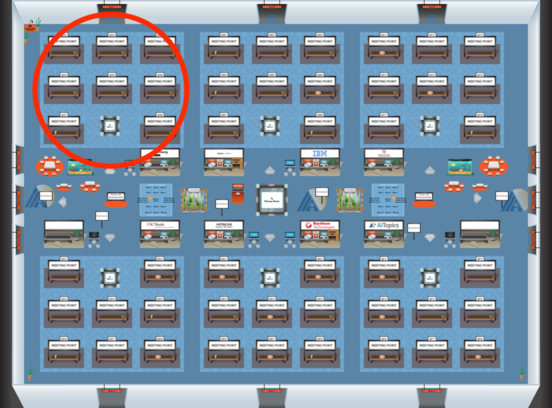

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Blue 1

Blue 1

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 1

Blue 1