Weakly Supervised Neuro-Symbolic Module Networks for Numerical Reasoning over Text

Amrita Saha, Shafiq Joty, Steven Hoi

[AAAI-22] Main Track

Abstract:

Neural Module Networks (NMNs) have been quite successful in incorporating explicit reasoning as learnable modules in various question answering tasks, including the most generic form of numerical reasoning over text in Machine Reading Comprehension (MRC). However, to achieve this, contemporary NMN models obtain strong supervision in the form of specialized program annotations, derived from the QA pairs through various heuristic parsing and the exhaustive computation of all possible discrete operations on discrete arguments. Consequently, they fail to generalize to more open-ended settings without such supervision. Hence, we propose the Weakly Supervised Neuro-Symbolic Module Network (WNSMN), trained with answers as the sole supervision for numerical reasoning based MRC. WNSMN learns to execute a noisy heuristic program, obtained from the dependency parse of the query, as discrete actions over both neural and symbolic reasoning modules, and it is trained end-to-end in a reinforcement learning framework with a discrete reward from answer matching. On the subset of DROP with numerical answers, WNSMN outperforms NMN by 32% and the reasoning-free generative language model GenBERT by 8% in exact match accuracy under comparable weakly supervised settings. This showcases the effectiveness of modular networks that can handle explicit discrete reasoning over noisy programs in an end-to-end manner.
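The abstract describes training with a discrete reward from answer matching rather than with program annotations. As a rough illustration of that general idea only, the following toy REINFORCE loop is sketched in Python; it is not the WNSMN architecture. The number list, the gold answer, the four operations, the flat softmax policy over (operation, argument, argument) actions, and the hyperparameters are all hypothetical choices made for this sketch.

```python
import math
import random

# Hypothetical toy setup: numbers already extracted from a passage, plus a gold numeric answer.
NUMBERS = [12.0, 7.0, 30.0]
GOLD = 5.0

# Hypothetical set of symbolic operations the policy can choose among.
OPS = {
    "sum": lambda a, b: a + b,
    "diff": lambda a, b: a - b,
    "max": lambda a, b: max(a, b),
    "min": lambda a, b: min(a, b),
}

# Each discrete action is an (operation, first-argument index, second-argument index) triple.
ACTIONS = [
    (op, i, j)
    for op in OPS
    for i in range(len(NUMBERS))
    for j in range(len(NUMBERS))
    if i != j
]


def execute(action):
    """Run the chosen symbolic operation on the chosen arguments."""
    op, i, j = action
    return OPS[op](NUMBERS[i], NUMBERS[j])


def reward(action):
    """Discrete reward from answer matching: 1.0 on an exact match, else 0.0."""
    return 1.0 if execute(action) == GOLD else 0.0


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=2000, lr=0.5, seed=0):
    """REINFORCE over a flat categorical policy (a stand-in for a learned network)."""
    rng = random.Random(seed)
    logits = [0.0] * len(ACTIONS)
    for _ in range(steps):
        probs = softmax(logits)
        # Sample one discrete action from the current policy.
        k = rng.choices(range(len(ACTIONS)), weights=probs)[0]
        r = reward(ACTIONS[k])
        # The gradient of log pi(k) w.r.t. the logits is onehot(k) - probs; ascend it scaled by reward.
        for a in range(len(logits)):
            logits[a] += lr * r * ((1.0 if a == k else 0.0) - probs[a])
    return softmax(logits)


probs = train()
best = ACTIONS[max(range(len(ACTIONS)), key=lambda a: probs[a])]
print(best, execute(best))
```

Because the reward is 1 only when the executed result exactly matches the answer, only the action that subtracts 7 from 12 ever receives a positive update here, so the policy concentrates on it. In real passages several different programs can yield the gold number, and answer matching alone cannot tell them apart.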

Sessions where this paper appears

- Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

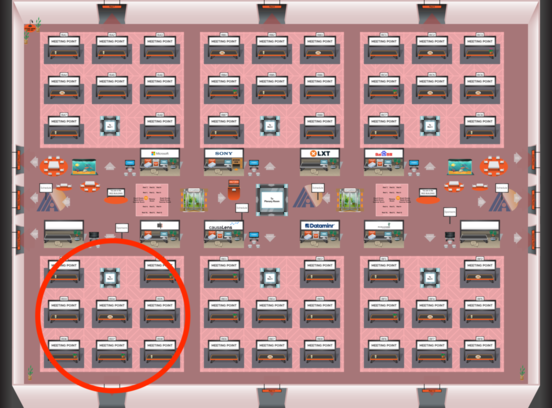

Red 4

- Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Red 4

- Oral Session 12

Mon, February 28 10:30 AM - 11:45 AM (+00:00)

Red 4
