Beyond Learning Features: Training a Fully-functional Classifier with ZERO Instance-level Labels

Deepak Babu Sam, Abhinav Agarwalla, Venkatesh Babu Radhakrishnan

[AAAI-22] Main Track

Abstract:

We attempt to train deep neural networks for classification without using any labeled data. Existing unsupervised methods, though they mine useful clusters or features, require some annotated samples to facilitate the final task-specific predictions. This defeats the true purpose of unsupervised learning; hence we envisage a paradigm of 'true' self-supervision, in which absolutely no annotated instances are used to train a classifier. The proposed method first pretrains a deep network through self-supervision and performs clustering on the learned features. A classifier layer is then appended to the self-supervised network and is trained by matching the distribution of the predictions to that of a predefined prior. This approach leverages the distribution of labels for supervisory signals and, consequently, no image-label pair is needed. Experiments reveal that the method works on major nominal as well as ordinal classification datasets and delivers significant performance.
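To make the distribution-matching idea in the abstract concrete, here is a minimal sketch, not the authors' implementation. It assumes the "predefined prior" is a known vector of class proportions and that matching is measured with a KL divergence between that prior and the batch-averaged softmax prediction; the abstract does not specify the actual loss, and the names `softmax` and `prior_matching_loss` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of class scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def prior_matching_loss(batch_logits, prior, eps=1e-12):
    """KL(prior || mean prediction) over a batch (an assumed loss form).

    batch_logits: list of per-sample class-score lists (no labels involved).
    prior: assumed class proportions, summing to 1.
    """
    probs = [softmax(row) for row in batch_logits]
    n = len(probs)
    # Average the predicted class distribution across the batch.
    mean_pred = [sum(p[k] for p in probs) / n for k in range(len(prior))]
    # The loss is zero when the averaged prediction equals the prior.
    return sum(
        q * (math.log(q + eps) - math.log(m + eps))
        for q, m in zip(prior, mean_pred)
    )


if __name__ == "__main__":
    uniform_prior = [0.25, 0.25, 0.25, 0.25]
    balanced = [[0.0, 0.0, 0.0, 0.0] for _ in range(8)]
    collapsed = [[10.0, 0.0, 0.0, 0.0] for _ in range(8)]
    print("balanced batch loss:", prior_matching_loss(balanced, uniform_prior))
    print("collapsed batch loss:", prior_matching_loss(collapsed, uniform_prior))
```

The loss uses only the class proportions, never an image-label pair, which is the point the abstract makes. A batch whose predictions collapse onto one class is penalized, while a batch whose average prediction follows the prior is not.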

Sessions where this paper appears

-

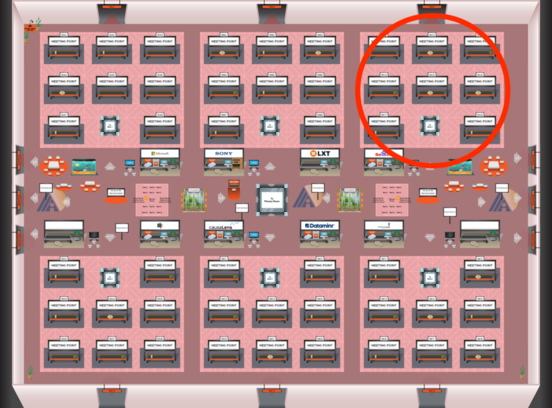

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Red 3

Red 3

-

Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Red 3

Red 3