Learning Modular Structures That Generalize Out-of-Distribution (Student Abstract)

Arjun Ashok, Chaitanya Devaguptapu, Vineeth Balasubramanian

[AAAI-22] Student Abstract and Poster Program

Abstract:

Out-of-distribution (O.O.D.) generalization remains a key challenge for real-world machine learning systems. We describe a method for O.O.D. generalization that, through training, encourages models to preserve only those features in the network that are well reused across multiple training domains. Our method combines two complementary neuron-level regularizers with a probabilistic differentiable binary mask over the network to extract a modular sub-network that achieves better O.O.D. performance than the original network. Preliminary evaluation on two benchmark datasets corroborates the promise of our method.
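
The abstract does not say how the probabilistic differentiable binary mask is parameterized. As an illustration only, one common way to make a binary mask differentiable is the binary concrete (Gumbel-sigmoid) relaxation: each neuron gets a learnable logit, logistic noise is added, and a temperature-scaled sigmoid yields a soft value in (0, 1). The sketch below assumes that relaxation; the function names, the temperature, and the 0.5 keep-threshold are hypothetical choices, not details taken from the paper, and the two neuron-level regularizers are not modeled.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_relaxed_mask(logits, temperature=0.5, rng=random):
    """Draw a soft mask with one value in (0, 1) per logit (assumed relaxation)."""
    mask = []
    for logit in logits:
        # Clamp u away from 0 and 1 so both log terms stay finite.
        u = min(max(rng.random(), 1e-6), 1.0 - 1e-6)
        noise = math.log(u) - math.log(1.0 - u)  # logistic noise
        mask.append(sigmoid((logit + noise) / temperature))
    return mask


def hard_mask(logits):
    """Deterministic binary mask: keep a neuron if its keep-probability exceeds 0.5."""
    return [1 if sigmoid(logit) > 0.5 else 0 for logit in logits]


def apply_mask(activations, mask):
    """Scale each neuron's activation by its mask value."""
    return [a * m for a, m in zip(activations, mask)]


# Hypothetical values for three neurons.
logits = [3.0, -3.0, 0.5]        # learnable mask parameters (assumed)
activations = [1.2, 0.7, -0.4]   # neuron outputs (assumed)

soft = sample_relaxed_mask(logits, rng=random.Random(0))  # used during training
kept = apply_mask(activations, hard_mask(logits))         # extracted sub-network
```

In this sketch the soft mask would be used during training so that gradients can reach the logits, while the hard mask selects the sub-network afterwards; the second neuron, whose logit is negative, is dropped.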

Sessions where this paper appears

-

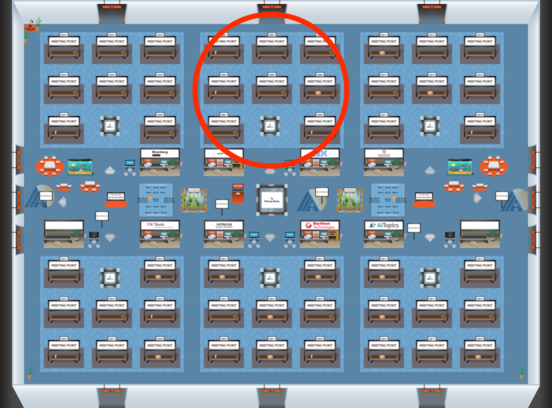

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Blue 2

Blue 2

- Poster Session 9
  Sun, February 27 8:45 AM - 10:30 AM (+00:00)
  Blue 2