Policy Optimization with Stochastic Mirror Descent

Long Yang, Yu Zhang, Gang Zheng, Qian Zheng, Pengfei Li, Jianhang Huang, Gang Pan

[AAAI-22] Main Track

Abstract:

Improving sample efficiency has been a longstanding goal in reinforcement learning.

This paper proposes the $\mathtt{VRMPO}$ algorithm: a sample efficient policy gradient method with stochastic mirror descent.

In $\mathtt{VRMPO}$, a novel variance-reduced policy gradient estimator is presented to improve sample efficiency.

Furthermore, we prove that $\mathtt{VRMPO}$ needs only $\mathcal{O}(\epsilon^{-3})$ sample trajectories to reach an $\epsilon$-approximate first-order stationary point, which matches the best-known sample complexity.

Extensive experimental results demonstrate that our algorithm outperforms state-of-the-art policy gradient methods in various settings.
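The abstract names two ingredients, a variance-reduced gradient estimator and a stochastic mirror descent update, without spelling out either. As a rough illustration only, the sketch below pairs a SARAH/SPIDER-style recursive gradient estimator (a standard variance-reduction technique, assumed here; the paper's own estimator may differ) with a mirror ascent step under the negative-entropy mirror map (exponentiated gradient). It runs on a hypothetical toy objective over the probability simplex, not on an RL problem, and every name (`MU`, `SIGMA`, `sample`, `grad`, `mean_grad`, `mirror_ascent_step`, `vr_mirror_ascent`) and hyperparameter is illustrative. In a real policy gradient setting, trajectories are drawn from the current policy, so the correction term would typically need importance weights. The toy omits them because its sampling distribution does not depend on `theta`.

```python
import math
import random

# Hypothetical toy problem (not from the paper): maximize
#   J(theta) = E[-0.5 * sum_i a * (theta_i - xi_i)^2]
# over the probability simplex, with a ~ U(0.5, 1.5) and xi_i ~ N(MU_i, SIGMA^2).
# Because E[a] = 1, the true gradient is (MU - theta) and the maximizer is theta = MU.
MU = [0.5, 0.3, 0.2]
SIGMA = 0.1


def sample():
    """Draw one random (a, xi) pair."""
    a = random.uniform(0.5, 1.5)
    xi = [m + random.gauss(0.0, SIGMA) for m in MU]
    return a, xi


def grad(theta, a, xi):
    """Stochastic gradient of the toy objective for a single sample."""
    return [a * (x - t) for t, x in zip(theta, xi)]


def mean_grad(theta, samples):
    """Average stochastic gradient at theta over a list of samples."""
    total = [0.0] * len(theta)
    for a, xi in samples:
        for i, g in enumerate(grad(theta, a, xi)):
            total[i] += g
    return [s / len(samples) for s in total]


def mirror_ascent_step(theta, v, step):
    """Mirror ascent with the negative-entropy mirror map (exponentiated gradient).

    Closed-form solution of argmax_x <v, x> - KL(x || theta) / step over the simplex.
    """
    w = [t * math.exp(step * g) for t, g in zip(theta, v)]
    z = sum(w)
    return [x / z for x in w]


def vr_mirror_ascent(iters=300, epoch=10, big_batch=1000, small_batch=10,
                     step=0.5, seed=0):
    """Variance-reduced stochastic mirror ascent on the toy objective.

    Returns the final iterate, a point in the interior of the simplex.
    """
    random.seed(seed)
    theta = [1.0 / len(MU)] * len(MU)  # start at the uniform distribution
    prev, v = None, None
    for k in range(iters):
        if k % epoch == 0:
            # Checkpoint: plain minibatch gradient with a large batch.
            v = mean_grad(theta, [sample() for _ in range(big_batch)])
        else:
            # Recursive correction: evaluate the SAME small batch at the
            # current and previous iterates so most of the sampling noise cancels.
            batch = [sample() for _ in range(small_batch)]
            g_new = mean_grad(theta, batch)
            g_old = mean_grad(prev, batch)
            v = [vi + gn - go for vi, gn, go in zip(v, g_new, g_old)]
        prev = theta
        theta = mirror_ascent_step(theta, v, step)
    return theta
```

Calling `vr_mirror_ascent()` should return a point on the simplex close to `MU`. Evaluating `g_new` and `g_old` on the same batch is what keeps the correction low-variance: for this toy objective, `g_new - g_old` equals `a * (prev - theta)`, so `xi` cancels and the remaining noise scales with the distance between consecutive iterates rather than with `SIGMA`.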

Sessions where this paper appears

- Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

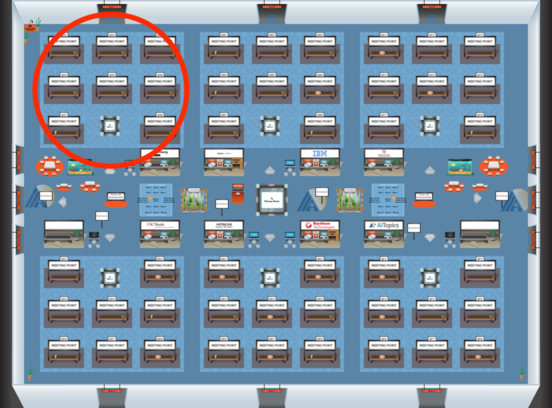

Blue 1

Blue 1

- Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Blue 1

Blue 1