Decompose the Sounds and Pixels, Recompose the Events

Varshanth R. Rao, Md Ibrahim Khalil, Haoda Li, Peng Dai, Juwei Lu

[AAAI-22] Main Track

Abstract:

In this paper, we propose a framework centering around a novel architecture called the Event Decomposition Recomposition Network (EDRNet) to tackle the Audio-Visual Event (AVE) localization problem in the supervised and weakly supervised settings. AVEs in the real world exhibit common unraveling patterns (termed as Event Progress Checkpoints (EPC)), which humans can perceive through the cooperation of their auditory and visual senses. Unlike earlier methods which attempt to recognize entire event sequences, the EDRNet models EPCs and inter-EPC relationships using stacked temporal convolutions. Based on the postulation that EPC representations are theoretically consistent for an event category, we introduce the State Machine Based Video Fusion, a novel augmentation technique that blends source videos using different EPC template sequences. Additionally, we design a new loss function called the Land-Shore-Sea loss to compactify continuous foreground and background representations. Lastly, to alleviate the issue of confusing events during weak supervision, we propose a prediction stabilization method called Bag to Instance Label Correction. Experiments on the AVE dataset show that our collective framework outperforms the state-of-the-art by a sizable margin.

Sessions where this paper appears

-

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

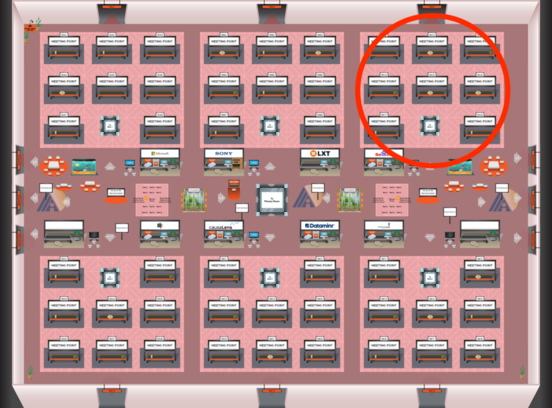

Red 3

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Red 3

-

Oral Session 7

Sat, February 26 6:30 PM - 7:45 PM (+00:00)

Red 3
