An Evaluative Measure of Clustering Methods Incorporating Hyperparameter Sensitivity

Siddhartha Mishra, Nicholas Monath, Michael Boratko, Ariel Kobren, Andrew McCallum

[AAAI-22] Main Track

Abstract:

Clustering algorithms are often evaluated using metrics that compare against ground-truth cluster assignments, such as the Rand index and NMI. Algorithm performance may vary widely across hyperparameter settings, however, so model selection based on optimal performance under these metrics is at odds with how these algorithms are applied in practice, where labels are unavailable and tuning is often more art than science. It is therefore desirable to compare clustering algorithms not only on their optimally tuned performance, but also on some notion of how realistic it would be to obtain this performance in practice. We propose an evaluation of clustering methods that captures this ease of tuning by modeling the expected best clustering score under a given computation budget. To encourage the adoption of the proposed metric alongside classic clustering evaluations, we provide an extensible benchmarking framework. We perform an extensive empirical evaluation of our proposed metric on popular clustering algorithms over a large collection of datasets from different domains, and find that it yields several noteworthy observations.
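To make "expected best clustering score under a given computation budget" concrete, here is a minimal sketch of one common way such a quantity can be estimated. It assumes random search, where each of `budget` hyperparameter trials is an independent draw (with replacement) from an empirical pool of already observed scores, and it computes the expected maximum of those draws. The function name `expected_best_score` and the sample scores are hypothetical, and this is not claimed to be the exact estimator used in the paper.

```python
def expected_best_score(scores, budget):
    """Expected maximum of `budget` i.i.d. draws from the empirical
    distribution of `scores` (assumption: random search, sampling
    with replacement; higher score is better)."""
    if budget < 1:
        raise ValueError("budget must be at least 1")
    if not scores:
        raise ValueError("scores must be non-empty")

    ordered = sorted(scores)
    n = len(ordered)
    expected = 0.0
    for i, score in enumerate(ordered, start=1):
        # P(max of `budget` draws is the i-th smallest score)
        #   = (i/n)^budget - ((i-1)/n)^budget
        weight = (i / n) ** budget - ((i - 1) / n) ** budget
        expected += score * weight
    return expected


# Hypothetical scores (e.g. adjusted Rand index) from four hyperparameter settings.
observed = [0.2, 0.4, 0.6, 0.8]
for b in (1, 2, 50):
    print(b, expected_best_score(observed, b))
```

With a budget of one trial the value equals the mean score, and it approaches the best observed score as the budget grows. An algorithm whose curve rises quickly is, under these assumptions, easier to tune than one that only reaches a high score after many trials.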


Sessions where this paper appears

-

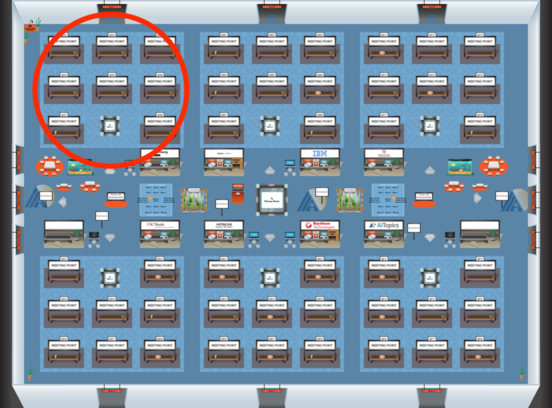

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Blue 1

Blue 1

- Poster Session 10

  Sun, February 27 4:45 PM - 6:30 PM (+00:00)

  Blue 1