Automated Synthesis of Generalized Invariant Strategies via Counterexample-Guided Strategy Refinement

Kailun Luo, Yongmei Liu

[AAAI-22] Main Track

Abstract:

Strategy synthesis for multi-agent systems has proved to be hard, even when restricted to two-player games with safety objectives. Generalized strategy synthesis, an extension of generalized planning that aims to produce a single solution for multiple (possibly infinitely many) planning instances, is a promising way to address the state-space explosion problem. In this paper, we formalize the problem of generalized strategy synthesis in the situation calculus. In general, the synthesis task involves second-order theorem proving. We therefore consider strategies that aim to maintain invariants; such strategies can be verified with first-order theorem proving. We propose a sound but incomplete approach to synthesizing invariant strategies by adapting the framework of counterexample-guided refinement. The key idea for refinement is to use a model checker to generate a strategy for a game constructed from the counterexample, and then use that strategy to refine the candidate general strategy. We implemented our method and ran experiments on a number of game problems. Our system successfully synthesizes solutions for most of the domains within a reasonable amount of time.
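The refinement loop described in the abstract can be illustrated with a toy sketch. This is not the authors' system: it uses no situation calculus, it replaces first-order theorem proving with a bounded check over finitely many instances, and it replaces the model checker with a brute-force solver. The game (a pile of stones, the environment and the controller alternately remove 1 or 2, and the controller must never let the environment take the last stone), the invariant "pile size is a multiple of 3 on the environment's turn", and all function names are assumptions made up for illustration.

```python
from functools import lru_cache

MOVES = (1, 2)  # each player removes 1 or 2 stones per turn


def invariant(s):
    # Candidate invariant on environment-turn states (an assumption of this toy).
    return s % 3 == 0


@lru_cache(maxsize=None)
def safe_for_controller(s):
    """Concrete solver (stand-in for a model checker): the environment is to
    move with s stones; True if the controller can guarantee that the
    environment never takes the last stone."""
    for e in MOVES:
        if e > s:
            continue
        t = s - e
        if t == 0:
            return False  # the environment takes the last stone
        if not any(m <= t and safe_for_controller(t - m) for m in MOVES):
            return False
    return True


def find_counterexample(rules, max_n):
    """Bounded check (stand-in for theorem proving): do the rules restore the
    invariant after every environment move? Returns a failing controller
    state, or None if no failure is found up to max_n."""
    for s in range(3, max_n + 1, 3):
        for e in MOVES:
            t = s - e
            m = rules[t % 3]  # a rule only looks at the pile size modulo 3
            if m > t or not invariant(t - m):
                return t
    return None


def synthesize(rules, max_n=30, max_rounds=10):
    """Counterexample-guided loop: check, solve the concrete game at the
    counterexample, and copy the concrete move into the general rules."""
    rules = dict(rules)
    for _ in range(max_rounds):
        t = find_counterexample(rules, max_n)
        if t is None:
            return rules
        good = [m for m in MOVES if m <= t and safe_for_controller(t - m)]
        if not good:
            return None  # give up; the sketch is incomplete by design
        rules[t % 3] = good[0]
    return None


# Start from a deliberately wrong candidate and let the loop repair it.
result = synthesize({1: 2, 2: 1})
print(result)
```

Starting from the wrong candidate, each round finds one controller state where the invariant breaks, solves that concrete game, and generalizes the winning move to every pile with the same remainder. Because the check here is bounded, the result is only validated up to `max_n`; the approach in the abstract instead verifies the invariant by theorem proving.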

Sessions where this paper appears

-

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

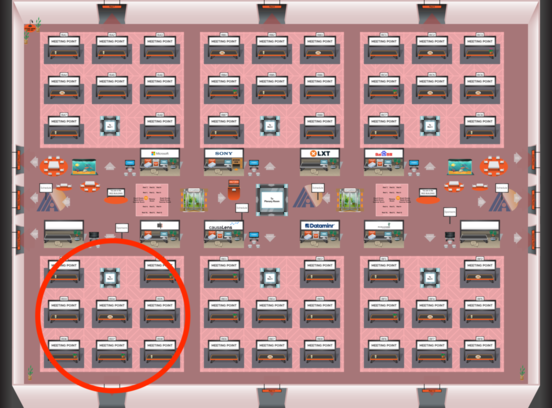

Red 4

Red 4

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Red 4

Red 4

-

Oral Session 2

Fri, February 25 2:30 AM - 3:45 AM (+00:00)

Fri, February 25 2:30 AM - 3:45 AM (+00:00)

Red 4

Red 4