Abstract:

We investigate the statistical and computational requirements of distributed kernel ridge regression with randomized sketching (DKRR-RS) and show that it achieves optimal learning rates with only a fraction of the computational cost. More precisely, DKRR-RS combines sparse randomized sketching, divide-and-conquer, and KRR to scale up kernel methods. In the basic setting, it attains the same learning rate as exact KRR in only $\mathcal{O}(N^{0.5+\gamma})$ expected time, where $N$ is the number of data points and $\gamma\in [0,1]$, which improves on previous state-of-the-art solutions. Next, to narrow the gap between theory and experiments, we derive the optimal learning rate in probability for DKRR-RS, which reflects its generalization performance. Finally, to further improve learning performance, we construct an efficient communication strategy for DKRR-RS and demonstrate the benefit of communication through theoretical analysis. Extensive experiments on real-world datasets validate the effectiveness of DKRR-RS and of the communication strategy.
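The abstract names the three ingredients of DKRR-RS (sparse randomized sketching, divide-and-conquer, and KRR) without fixing their details. The NumPy sketch below shows one way to combine them, under assumptions that are not taken from the paper: a Gaussian kernel, a hypothetical sparse random-sign sketch matrix $S \in \mathbb{R}^{m \times n}$, the sketched-KRR formulation that restricts each local coefficient vector to $\alpha = S^\top\theta$ with $\theta$ solving $(SK^2S^\top + n\lambda\, SKS^\top)\,\theta = SKy$, and plain averaging of the local predictions. It forms each local kernel matrix explicitly, so it illustrates the structure of the method rather than reproducing the $\mathcal{O}(N^{0.5+\gamma})$ cost, and it omits the communication strategy. All function names and parameters are illustrative.

```python
import numpy as np


def gaussian_kernel(a, b, width):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b (assumed kernel)."""
    sq_dists = (np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :]
                - 2.0 * a @ b.T)
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * width**2))


def sparse_sign_sketch(m, n, density, rng):
    """Hypothetical sparse sketch: each entry is +/-1/sqrt(m*density) w.p. density, else 0."""
    mask = rng.random((m, n)) < density
    signs = rng.choice([-1.0, 1.0], size=(m, n))
    return mask * signs / np.sqrt(m * density)


def fit_sketched_krr(x, y, m, lam, density, width, rng):
    """Sketched KRR on one local block, with coefficients restricted to alpha = S^T theta."""
    n = x.shape[0]
    k = gaussian_kernel(x, x, width)
    s = sparse_sign_sketch(m, n, density, rng)
    sk = s @ k                                # S K, shape (m, n)
    lhs = sk @ sk.T + n * lam * (sk @ s.T)    # S K K S^T + n*lam*S K S^T (K is symmetric)
    theta = np.linalg.lstsq(lhs, sk @ y, rcond=None)[0]  # right-hand side is S K y
    return x, s.T @ theta                     # local training inputs and coefficients alpha


def fit_dkrr_rs(x, y, num_parts, m, lam, density, width, seed=0):
    """Divide-and-conquer step: random disjoint blocks, one sketched KRR fit per block."""
    rng = np.random.default_rng(seed)
    blocks = np.array_split(rng.permutation(x.shape[0]), num_parts)
    return [fit_sketched_krr(x[idx], y[idx], m, lam, density, width, rng) for idx in blocks]


def predict_dkrr_rs(models, x_new, width):
    """Global estimator: unweighted average of the local sketched-KRR predictions."""
    local_preds = [gaussian_kernel(x_new, xb, width) @ alpha for xb, alpha in models]
    return np.mean(local_preds, axis=0)


if __name__ == "__main__":
    # Synthetic 1-D regression problem, used only to exercise the sketch.
    rng = np.random.default_rng(1)
    x = rng.uniform(-1.0, 1.0, size=(600, 1))
    y = np.sin(3.0 * x[:, 0]) + 0.1 * rng.standard_normal(600)
    models = fit_dkrr_rs(x, y, num_parts=4, m=30, lam=1e-3, density=0.2, width=0.5)
    x_test = np.linspace(-1.0, 1.0, 50)[:, None]
    pred = predict_dkrr_rs(models, x_test, width=0.5)
    mse = np.mean((pred - np.sin(3.0 * x_test[:, 0])) ** 2)
    print(f"test MSE against the noiseless target: {mse:.4f}")
```

In this sketch, each block only solves an $m \times m$ linear system instead of an $(N/p) \times (N/p)$ one, which is where sketching saves work on top of the data split; the paper's actual sketch construction, parameter choices, and communication rounds may differ.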

Sessions where this paper appears

-

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

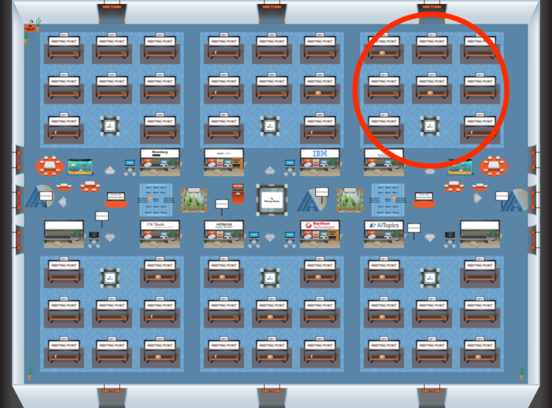

Blue 3

Blue 3

-

Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Blue 3

Blue 3