How Good Are Low-Rank Approximations in Gaussian Process Regression?

Constantinos Daskalakis, Petros Dellaportas, Aristeidis Panos

[AAAI-22] Main Track

Abstract:

We provide guarantees for approximate Gaussian Process (GP) regression resulting from two common low-rank kernel approximations: one based on random Fourier features and one based on truncating the kernel's Mercer expansion. In particular, we bound the Kullback–Leibler divergence between an exact GP and one resulting from either of these low-rank approximations to its kernel, as well as between their corresponding predictive densities. We also bound the error between the predictive mean vectors and between the predictive covariance matrices computed using the exact versus the approximate GP. We provide experiments on both simulated data and standard benchmarks to evaluate the effectiveness of our theoretical bounds.
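To make the quantities in the abstract concrete, here is a minimal sketch in plain Python (standard library only). It is not the authors' code. It rests on our own assumptions: a one-dimensional squared-exponential kernel, the standard random-Fourier-feature construction z(x) = sqrt(2/D) cos(w x + b) with w drawn from the kernel's spectral density, Gaussian observation noise with variance 0.1, and the divergence direction KL(exact || approximate). It uses the GP marginals N(0, K + noise I) and N(0, K_rff + noise I) on the training inputs as a finite-dimensional stand-in for the two GPs. All helper names (`rff_features`, `gaussian_kl_zero_mean`, `compare_exact_vs_rff`, etc.) are hypothetical. The sketch computes the KL divergence between the exact and approximate marginals and the distance between their predictive mean vectors. The Mercer-truncation approximation is not sketched here.

```python
import math
import random

# Hypothetical helpers for illustration only; not the paper's implementation.

def rbf(x, y, lengthscale=1.0):
    """Squared-exponential kernel exp(-(x - y)^2 / (2 l^2)) on scalar inputs."""
    return math.exp(-((x - y) ** 2) / (2.0 * lengthscale ** 2))

def rff_features(xs, num_features, lengthscale=1.0, seed=0):
    """Random Fourier features z(x) = sqrt(2/D) cos(w x + b), with
    w ~ N(0, 1/l^2) (the RBF spectral density) and b ~ U[0, 2*pi],
    so that E[z(x) . z(y)] = rbf(x, y)."""
    rng = random.Random(seed)
    ws = [rng.gauss(0.0, 1.0 / lengthscale) for _ in range(num_features)]
    bs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)
    return [[scale * math.cos(w * x + b) for w, b in zip(ws, bs)] for x in xs]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def with_noise(k, noise_var):
    """Return K + noise_var * I."""
    n = len(k)
    return [[k[i][j] + (noise_var if i == j else 0.0) for j in range(n)]
            for i in range(n)]

def cholesky(a):
    """Lower-triangular L with L L^T = a (a must be symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low

def chol_solve(low, b):
    """Solve (L L^T) x = b by forward, then backward, substitution."""
    n = len(low)
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(low[i][k] * y[k] for k in range(i))) / low[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x

def gaussian_kl_zero_mean(s1, s2):
    """KL(N(0, s1) || N(0, s2)) = 0.5 * [tr(s2^-1 s1) - n + log det s2 - log det s1]."""
    n = len(s1)
    l1, l2 = cholesky(s1), cholesky(s2)
    trace = sum(chol_solve(l2, [s1[i][j] for i in range(n)])[j] for j in range(n))
    logdet1 = 2.0 * sum(math.log(l1[i][i]) for i in range(n))
    logdet2 = 2.0 * sum(math.log(l2[i][i]) for i in range(n))
    return 0.5 * (trace - n + logdet2 - logdet1)

def predictive_mean(k_train, k_star, ys, noise_var):
    """GP posterior mean k_*^T (K + noise_var I)^{-1} y for each row of k_star."""
    alpha = chol_solve(cholesky(with_noise(k_train, noise_var)), ys)
    return [dot(row, alpha) for row in k_star]

def compare_exact_vs_rff(xs, ys, x_test, num_features,
                         noise_var=0.1, lengthscale=1.0, seed=0):
    """Return (KL between exact and RFF marginals on xs,
    Euclidean distance between exact and RFF predictive means on x_test)."""
    z = rff_features(xs + x_test, num_features, lengthscale, seed)
    z_train, z_test = z[:len(xs)], z[len(xs):]
    k_exact = [[rbf(a, b, lengthscale) for b in xs] for a in xs]
    ks_exact = [[rbf(t, b, lengthscale) for b in xs] for t in x_test]
    k_rff = [[dot(u, v) for v in z_train] for u in z_train]
    ks_rff = [[dot(u, v) for v in z_train] for u in z_test]
    kl = gaussian_kl_zero_mean(with_noise(k_exact, noise_var),
                               with_noise(k_rff, noise_var))
    m_exact = predictive_mean(k_exact, ks_exact, ys, noise_var)
    m_rff = predictive_mean(k_rff, ks_rff, ys, noise_var)
    return kl, math.sqrt(sum((a - b) ** 2 for a, b in zip(m_exact, m_rff)))

if __name__ == "__main__":
    xs = [i / 4.0 for i in range(12)]
    ys = [math.sin(x) for x in xs]
    for d in (10, 100, 1000):
        kl, mean_err = compare_exact_vs_rff(xs, ys, [0.3, 1.7], d)
        print(f"D={d}: KL={kl:.4f}, predictive-mean error={mean_err:.4f}")
```

Because the feature inner product is an unbiased estimate of the kernel whose variance falls as 1/D, both printed numbers would typically shrink as the number of features D grows. The exact values depend on the random seed and the toy data above, and they are not results from the paper.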

Sessions where this paper appears

-

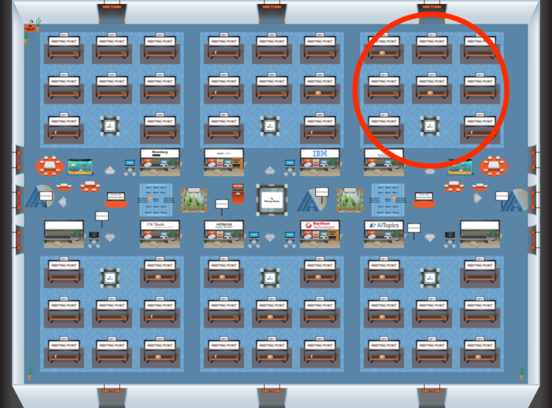

Poster Session 6

Blue 3

Blue 3

-

Poster Session 7

Blue 3

Blue 3

-

Oral Session 7

Blue 3

Blue 3