Trading Complexity for Sparsity in Random Forest Explanations

Gilles Audemard, Steve Bellart, Lounès Bounia, Frédéric Koriche, Jean-Marie Lagniez, Pierre Marquis

[AAAI-22] Main Track

Abstract:

Random forests have long been considered as powerful model ensembles in machine learning. By training multiple decision trees, whose diversity is fostered through data and feature subsampling, the resulting random forest can lead to more stable and reliable predictions than a single decision tree. This however comes at the cost of decreased interpretability: while decision trees are often easily interpretable, the predictions made by random forests are much more difficult to understand, as they involve a majority vote over multiple decision trees. In this paper, we examine different types of reasons that explain “why” an input instance is classified as positive or negative by a Boolean random forest. Notably, as an alternative to prime-implicant explanations taking the form of subset-minimal implicants of the random forest, we introduce majoritary reasons which are subset-minimal implicants of a strict majority of decision trees. For these abductive explanations, the tractability of the generation problem (finding one reason) and the optimization problem (finding one shortest reason) are investigated. Experiments conducted on various datasets reveal the existence of a trade-off between runtime complexity and sparsity. In practice, prime-implicant explanations - for which the identification problem is DP-complete - are slightly larger than majoritary reasons that can be generated using a simple linear-time greedy algorithm. They are also significantly larger than minimum-size majoritary reasons which can be approached using an anytime PARTIAL MAXSAT algorithm.

Introduction Video

Sessions where this paper appears

-

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

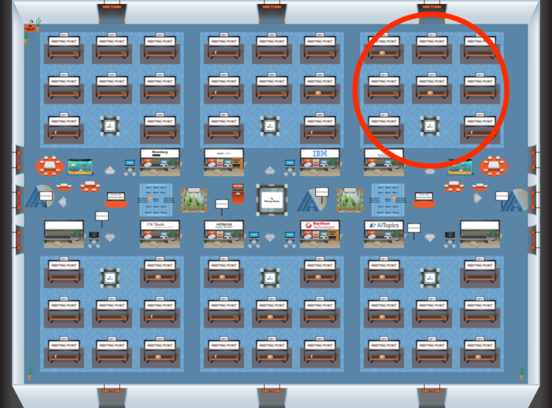

Blue 3

Blue 3

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 3

Blue 3

-

Oral Session 4

Fri, February 25 6:45 PM - 8:00 PM (+00:00)

Fri, February 25 6:45 PM - 8:00 PM (+00:00)

Blue 3

Blue 3