Learning Network Architecture for Open-Set Recognition

Xuelin Zhang, Xuelian Cheng, Donghao Zhang, Paul Bonnington, Zongyuan Ge

[AAAI-22] Main Track

Abstract:

Given incomplete knowledge of the classes that exist in the world, Open-set Recognition (OSR) enables networks to identify and reject unseen classes after training. This problem of breaking the common closed-set assumption is far from solved. Recent studies focus on designing new losses, neural network encoding structures, and calibration methods to optimize a feature space for OSR-relevant tasks. In this work, we make the first attempt to tackle OSR by searching the architecture of a Neural Network (NN) under the open-set assumption. In contrast to prior art, we develop a mechanism that both searches the architecture of the network and trains a network suitable for tackling OSR. Inspired by the compact abating probability (CAP) model, which is theoretically proven to reduce the open space risk, we regularize the search space by VAE contrastive learning. To discover a more robust structure for OSR, we propose Pseudo Auxiliary Searching (PAS), in which we split off a pseudo set of known-unknown classes from the original training set in the search phase, enabling the super-net to explore in advance an effective architecture that can handle unseen classes. We demonstrate the benefits of this learning pipeline on five OSR datasets, MNIST, SVHN, CIFAR10, CIFARAdd10, and CIFARAdd50, where our approach outperforms prior state-of-the-art networks designed by humans.
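The class split behind PAS can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `split_pseudo_unknown`, its parameters, and the choice to pick the held-out classes uniformly at random are all assumptions made for illustration, since the abstract does not say how the pseudo known-unknown classes are selected.

```python
import random


def split_pseudo_unknown(labels, num_pseudo_unknown, seed=0):
    """Partition training samples by class into pseudo-known and pseudo-unknown.

    Hypothetical helper: the random class selection is an assumption,
    not a detail taken from the paper.
    """
    classes = sorted(set(labels))
    if not 0 < num_pseudo_unknown < len(classes):
        raise ValueError("num_pseudo_unknown must leave at least one known class")

    # Choose which training classes will play the role of "unknown" during search.
    rng = random.Random(seed)
    held_out = set(rng.sample(classes, num_pseudo_unknown))

    # Sample indices for each side of the split.
    known_idx = [i for i, y in enumerate(labels) if y not in held_out]
    unknown_idx = [i for i, y in enumerate(labels) if y in held_out]
    return known_idx, unknown_idx, held_out


# Toy usage: six classes, two of them treated as pseudo-unknown.
toy_labels = [0, 1, 2, 3, 4, 5] * 2
known_idx, unknown_idx, held_out = split_pseudo_unknown(toy_labels, 2)
```

In a setup like this, the pseudo-known indices would feed the usual classification objective during the architecture search, while the pseudo-unknown indices would serve only to score how well a candidate architecture rejects classes it was not trained to recognize.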

Sessions where this paper appears

-

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

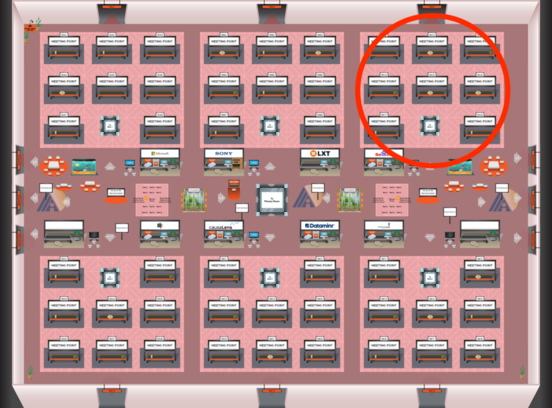

Red 3

-

Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Red 3

-

Oral Session 1

Thu, February 24 6:30 PM - 7:45 PM (+00:00)

Red 3
