KAM Theory Meets Statistical Learning Theory: Hamiltonian Neural Networks with Non-Zero Training Loss

Yuhan Chen, Takashi Matsubara, Takaharu Yaguchi

[AAAI-22] Main Track

Abstract:

Many physical phenomena are described by Hamiltonian mechanics using an energy function (the Hamiltonian). Recently, the Hamiltonian neural network, which approximates the Hamiltonian by a neural network, and its extensions have attracted much attention. These models are powerful in practice, but theoretical studies of them are limited. In this study, by combining statistical learning theory and KAM theory, we provide a theoretical analysis of the behavior of Hamiltonian neural networks when the learning error is not exactly zero. A Hamiltonian neural network with non-zero error can be regarded as a perturbation of the true dynamics, and the perturbation theory of Hamilton's equations is widely known as KAM theory. To apply KAM theory, we provide a generalization error bound for Hamiltonian neural networks by deriving an estimate of the covering number of the gradient of the multi-layer perceptron, which is the key ingredient of the model. This error bound gives the $L^\infty$ bound on the Hamiltonian that is required to apply KAM theory.
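
As a rough sketch of the perturbation viewpoint described in the abstract, the setting can be written out as follows. The notation here is an assumption made for illustration and is not taken from the paper: $(q, p)$ denotes the canonical coordinates, $H$ the true Hamiltonian, $H_\theta$ the learned one, and $\varepsilon$ the size of the error.

$$
\dot{q} = \frac{\partial H}{\partial p}, \qquad \dot{p} = -\frac{\partial H}{\partial q},
$$

$$
H_\theta = H + (H_\theta - H), \qquad \| H_\theta - H \|_{L^\infty} \le \varepsilon .
$$

Under this reading, the learned model follows the same equations with $H$ replaced by $H_\theta$, so a non-zero training loss corresponds to a perturbation term $H_\theta - H$ whose size is controlled by the generalization error bound. The precise hypotheses under which KAM theory applies are those stated in the paper, not this sketch.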

Sessions where this paper appears

- Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

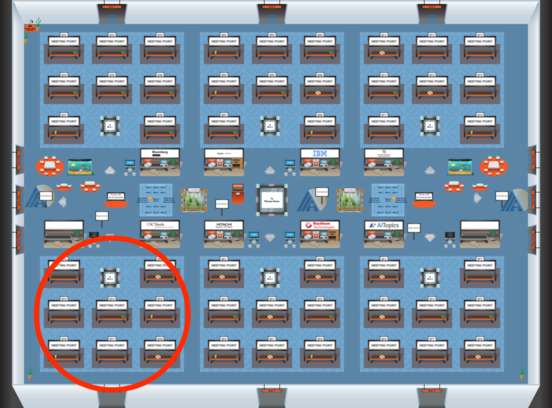

Blue 4

- Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 4

- Oral Session 7

Sat, February 26 6:30 PM - 7:45 PM (+00:00)

Blue 4
