Retinomorphic Object Detection in Asynchronous Visual Streams

Jianing Li, Xiao Wang, Lin Zhu, Jia Li, Tiejun Huang, Yonghong Tian

[AAAI-22] Main Track

Abstract:

Conventional frame-based cameras struggle with object detection under high-speed motion blur and challenging illumination. Neuromorphic cameras, which output asynchronous visual streams instead of intensity frames, offer high temporal resolution and high dynamic range and thus bring a new perspective to this challenge. In this paper, we propose a novel problem setting, retinomorphic object detection, which is the first attempt to integrate foveal-like and peripheral-like visual streams. Technically, we first build a large-scale multimodal neuromorphic object detection dataset (i.e., PKU-Vidar-DVS) with over 215.5k spatio-temporally synchronized labels. Then, we design temporal aggregation representations to preserve the spatio-temporal information from asynchronous visual streams. Finally, we present a novel bio-inspired unifying framework that fuses the two sensing modalities via a dynamic interaction mechanism. Our experimental evaluation shows that our approach achieves significant improvements over state-of-the-art single-modality methods, especially in high-speed motion and low-light scenarios. We hope that our work will attract further research into this newly identified yet crucial research direction. Our dataset is available at https://www.pkuml.org/resources/pku-vidar-dvs.html.

Sessions where this paper appears

-

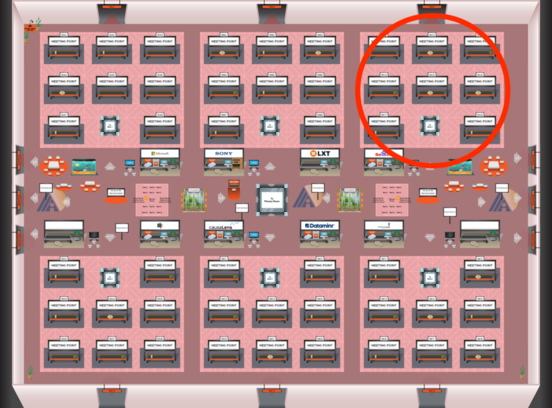

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Red 3

Red 3

-

Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Red 3

Red 3

-

Oral Session 11

Mon, February 28 2:30 AM - 3:45 AM (+00:00)

Mon, February 28 2:30 AM - 3:45 AM (+00:00)

Red 3

Red 3