Dual Attention Networks for Few-Shot Fine-Grained Recognition

Shu-Lin Xu, Faen Zhang, Xiu-Shen Wei, Jianhua Wang

[AAAI-22] Main Track

Abstract:

Few-shot fine-grained recognition aims to classify images of subordinate categories from only a few examples. Because the categories are fine-grained, the model must capture subtle but discriminative part-level patterns from limited training data, which makes the problem challenging. In this paper, to generate representations tailored to fine-grained few-shot recognition, we propose a Dual Attention Network (Dual Att-Net) consisting of two branches, one using hard attention and the other soft attention. Specifically, attention guidance is produced from the deep activations of an input image. Our hard attention is realized by keeping a few useful deep descriptors and forming them into a bag for multi-instance learning. Since these deep descriptors could correspond to objects' parts, modeling them as a multi-instance bag makes it possible to exploit the inherent correlation among these fine-grained parts. On the other side, a soft-attended activation representation is obtained by applying the attention guidance to the original activations, which provides comprehensive attention information as the counterpart of hard attention. The outputs of both branches are then aggregated into a holistic image embedding of the input image. By performing meta-learning, we learn a powerful image embedding in a metric space that generalizes to novel classes. Experiments on three popular fine-grained benchmark datasets show that Dual Att-Net clearly outperforms existing state-of-the-art methods.
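
The two attention branches described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify how the attention guidance is computed, how many descriptors are kept, or how the multi-instance bag is aggregated. The sketch assumes that guidance is the channel-wise sum of each spatial descriptor scaled to [0, 1], that the hard branch keeps the top-k descriptors, and that mean pooling stands in for the multi-instance aggregator. All function names and the parameter k are hypothetical.

```python
from typing import List

Descriptor = List[float]  # one deep descriptor (channel vector) at a spatial location


def attention_guidance(descriptors: List[Descriptor]) -> List[float]:
    """Assumed guidance: channel-wise sum per location, min-max scaled to [0, 1]."""
    raw = [sum(d) for d in descriptors]
    lo, hi = min(raw), max(raw)
    if hi == lo:  # flat activation map: treat every location as equally important
        return [1.0] * len(raw)
    return [(r - lo) / (hi - lo) for r in raw]


def hard_attention(descriptors: List[Descriptor], guidance: List[float], k: int) -> List[Descriptor]:
    """Hard branch: keep only the k descriptors with the highest guidance as a bag."""
    order = sorted(range(len(descriptors)), key=lambda i: guidance[i], reverse=True)
    return [descriptors[i] for i in order[:k]]


def soft_attention(descriptors: List[Descriptor], guidance: List[float]) -> List[Descriptor]:
    """Soft branch: re-weight every descriptor by its guidance value."""
    return [[g * x for x in d] for d, g in zip(descriptors, guidance)]


def mean_pool(vectors: List[Descriptor]) -> Descriptor:
    """Average a set of descriptors channel by channel (stand-in aggregator)."""
    n = len(vectors)
    return [sum(channel) / n for channel in zip(*vectors)]


def dual_embedding(descriptors: List[Descriptor], k: int) -> Descriptor:
    """Concatenate the pooled hard-attention bag with the pooled soft-attended map."""
    guidance = attention_guidance(descriptors)
    bag = hard_attention(descriptors, guidance, k)
    soft = soft_attention(descriptors, guidance)
    return mean_pool(bag) + mean_pool(soft)  # list concatenation, not addition


# Toy activation map: 4 spatial locations, 2 channels each.
toy_descriptors = [[0.0, 0.0], [1.0, 3.0], [2.0, 2.0], [0.5, 0.5]]
toy_embedding = dual_embedding(toy_descriptors, k=2)
print(toy_embedding)
```

In a metric-based few-shot setting, embeddings of this kind would be compared between query and support images; the choice of distance and the meta-learning procedure are left out of this sketch because the abstract does not describe them.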

Sessions where this paper appears

-

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

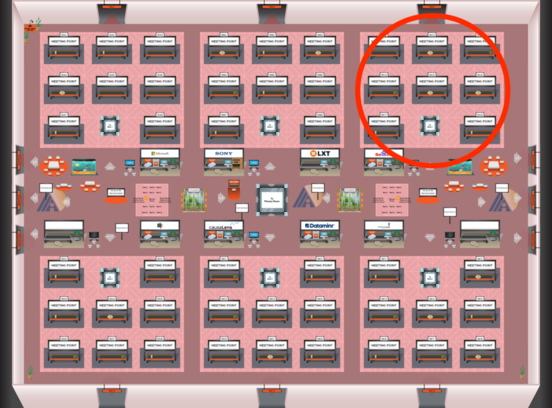

Red 3

Red 3

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Red 3

Red 3

-

Oral Session 9

Sun, February 27 10:30 AM - 11:45 AM (+00:00)

Sun, February 27 10:30 AM - 11:45 AM (+00:00)

Red 3

Red 3