PrivateSNN: Privacy-Preserving Spiking Neural Networks

Youngeun Kim, Yeshwanth Venkatesha, Priyadarshini Panda

[AAAI-22] Main Track

Abstract:

How can we bring both privacy and energy efficiency to a neural system on edge devices? In this paper, we propose PrivateSNN, which aims to build low-power Spiking Neural Networks (SNNs) from a pre-trained ANN model without leaking sensitive information contained in a dataset. We tackle two types of leakage: (1) data leakage, caused when the networks access real training data during the ANN-SNN conversion process, and (2) class leakage, caused when class-related features can be reconstructed from network parameters. To address data leakage, we generate synthetic images from the pre-trained ANNs and convert ANNs to SNNs using the generated images. However, converted SNNs remain vulnerable to class leakage because their weight parameters are identical to (or scaled versions of) the ANN parameters. Therefore, we encrypt SNN weights by training SNNs with a temporal spike-based learning rule. Updating weight parameters with temporal data makes the networks difficult to interpret in the spatial domain. We observe that the encrypted PrivateSNN eliminates data and class leakage issues with a slight performance drop (less than about 1%) and a significant energy-efficiency gain (about 60x) compared to the standard ANN. We conduct extensive experiments on various datasets including CIFAR10, CIFAR100, and TinyImageNet, highlighting the importance of privacy-preserving SNN training. The code will be made available.
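To make the class-leakage point concrete, the following is a minimal sketch of generic rate-based ANN-SNN conversion for a single neuron. It is not the authors' implementation: the function names, the weights, and the small `synthetic_inputs` list (standing in for images generated from the pre-trained ANN) are all hypothetical. It assumes an integrate-and-fire neuron with soft reset and a firing threshold set to the largest activation seen on the calibration inputs. The ANN weights are reused unchanged, which is why a converted SNN exposes the same parameters as the ANN until it is retrained.

```python
def relu(x):
    """Standard ANN activation."""
    return max(0.0, x)


def pre_activation(weights, x):
    """Weighted sum shared by the ANN neuron and its converted SNN counterpart."""
    return sum(w * xi for w, xi in zip(weights, x))


def calibrate_threshold(weights, calibration_inputs):
    """Assumed threshold-balancing rule: use the largest ReLU activation
    observed on the calibration inputs as the firing threshold."""
    max_act = max(relu(pre_activation(weights, x)) for x in calibration_inputs)
    return max_act if max_act > 0.0 else 1.0


def if_neuron_rate(input_current, threshold=1.0, timesteps=100):
    """Simulate an integrate-and-fire neuron driven by a constant current
    and return its firing rate (spikes per timestep)."""
    membrane = 0.0
    spikes = 0
    for _ in range(timesteps):
        membrane += input_current
        if membrane >= threshold:
            spikes += 1
            membrane -= threshold  # soft reset keeps the residual potential
    return spikes / timesteps


# Hypothetical ANN weights, copied unchanged into the SNN neuron.
weights = [0.5, -0.25, 0.75]

# Hypothetical stand-ins for synthetic images; no real training data is used.
synthetic_inputs = [[1.0, 0.0, 1.0], [0.2, 0.4, 0.6], [0.0, 1.0, 0.0]]

threshold = calibrate_threshold(weights, synthetic_inputs)

for x in synthetic_inputs:
    current = pre_activation(weights, x)
    ann_out = relu(current)
    snn_out = threshold * if_neuron_rate(current, threshold, timesteps=100)
    print(f"ANN activation: {ann_out:.4f}  SNN rate x threshold: {snn_out:.4f}")
```

Under these assumptions the scaled firing rate tracks the ReLU activation to within roughly one spike's worth of quantization error, and that error shrinks as the number of timesteps grows. The sketch covers only the conversion step; it does not model synthetic image generation or the temporal spike-based training that the abstract describes.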

Sessions where this paper appears

-

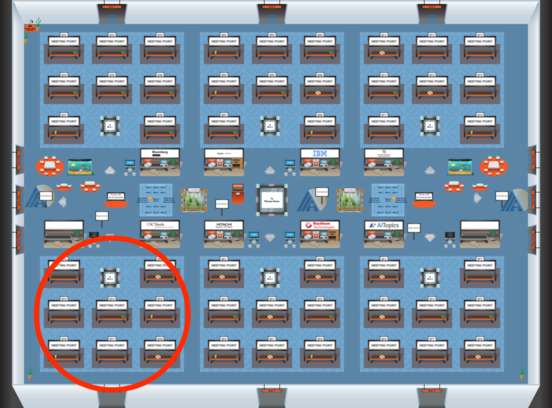

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Blue 4

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 4
